Glue Package In R Download

Wrangling Data in the Tidyverse

In the last course we spent a ton of time talking about all the most common ways data are stored and reviewed how to get them into a tibble (or data.frame) in R.

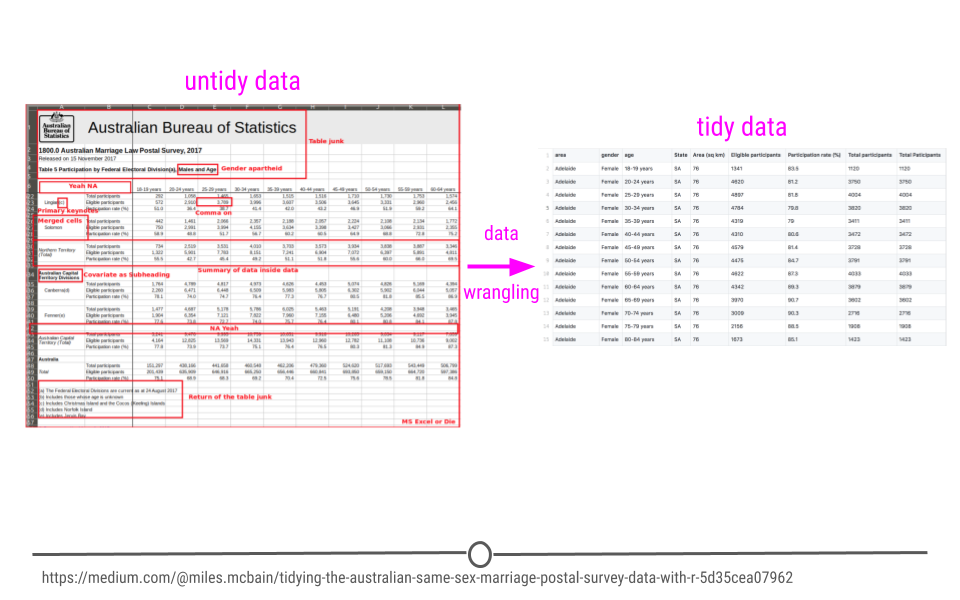

So far we've discussed what tidy and untidy data are. We've (hopefully) convinced you that tidy data are the right type of data to work with. What we may not have made perfectly clear yet is that data are not always the tidiest when they come to you at the start of a project. An incredibly important skill of a data scientist is to be able to take data from an untidy format and get it into a tidy format. This process is often referred to as data wrangling. Generally, data wranglings skills are those that allow you to wrangle data from the format they're currently in into the tidy format you actually want them in.

Beyond data wrangling, it's also important to make sure the data you have are accurate and what you need to answer your question of interest. After wrangling the data into a tidy format, there is often further work that has to be done to clean the data.

About This Course

Data never arrive in the condition that you need them in order to do effective data analysis. Data need to be re-shaped, re-arranged, and re-formatted, so that they can be visualized or be inputted into a machine learning algorithm. This course addresses the problem of wrangling your data so that you can bring them under control and analyze them effectively. The key goal in data wrangling is transforming non-tidy data into tidy data.

This course covers many of the critical details about handling tidy and non-tidy data in R such as converting from wide to long formats, manipulating tables with the dplyr package, understanding different R data types, processing text data with regular expressions, and conducting basic exploratory data analyses. Investing the time to learn these data wrangling techniques will make your analyses more efficient, more reproducible, and more understandable to your data science team.

In this specialization we assume familiarity with the R programming language. If you are not yet familiar with R, we suggest you first complete R Programming before returning to complete this course.

Data wrangling example

Tidy Data Review

Before we move any further, let's review the requirements for a tidy dataset:

- Each variable is stored in a column

- Each observation is stored in a row

- Each cell stores a single value

We had four tidy data principles in an earlier lesson, where the fourth was that each table should store a single type of information. That's less critical here, as we'll be working at first with single datasets, so let's just keep those three tidy data principles at the front of our minds.

Reshaping Data

Tidy data generally exist in two forms: wide data and long data. Both types of data are used and needed in data analysis, and fortunately, there are tools that can take you from wide-to-long format and from long-to-wide format. This makes it easy to work with any tidy dataset. We'll discuss the basics of what wide and long data are and how to go back and forth between the two in R. Getting data into the right format will be crucial later when summarizing data and visualizing it.

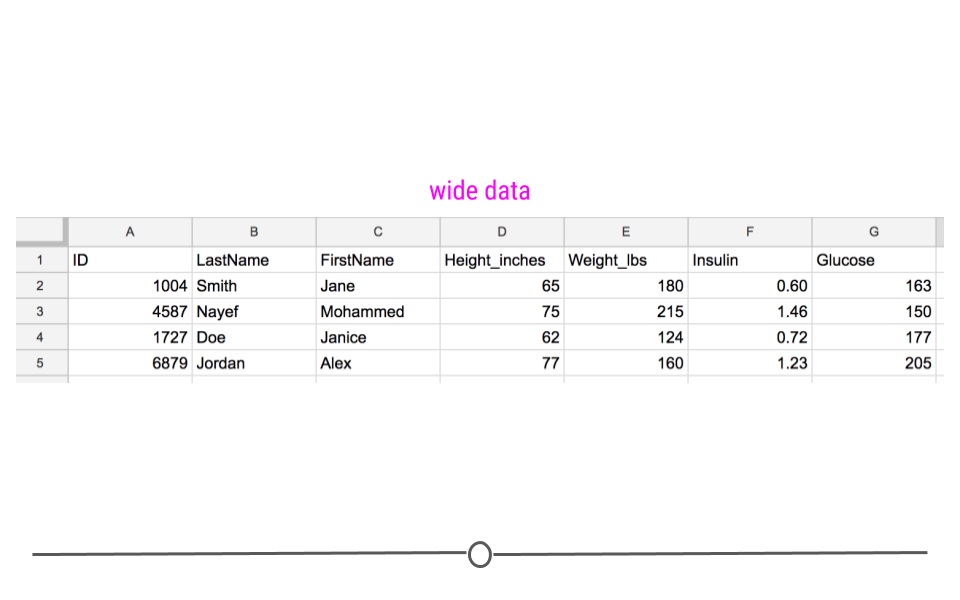

Wide Data

Wide data has a column for each variable and a row for each observation. Data are often entered and stored in this manner. This is because wide data are often easy to understand at a glance. For example, this is a wide dataset:

Wide dataset

Up until this point, we would have described this dataset as a rectangular, tidy dataset. With the additional information just introduced, we can also state that it is a wide dataset. Here, you can clearly see what measurements were taken for each individual and can get a sense of how many individuals are contained in the dataset.

Specifically, each individual is in a different row with each variable in a different column. At a glance we can quickly see that we have information about four different people and that each person was measured in four different ways.

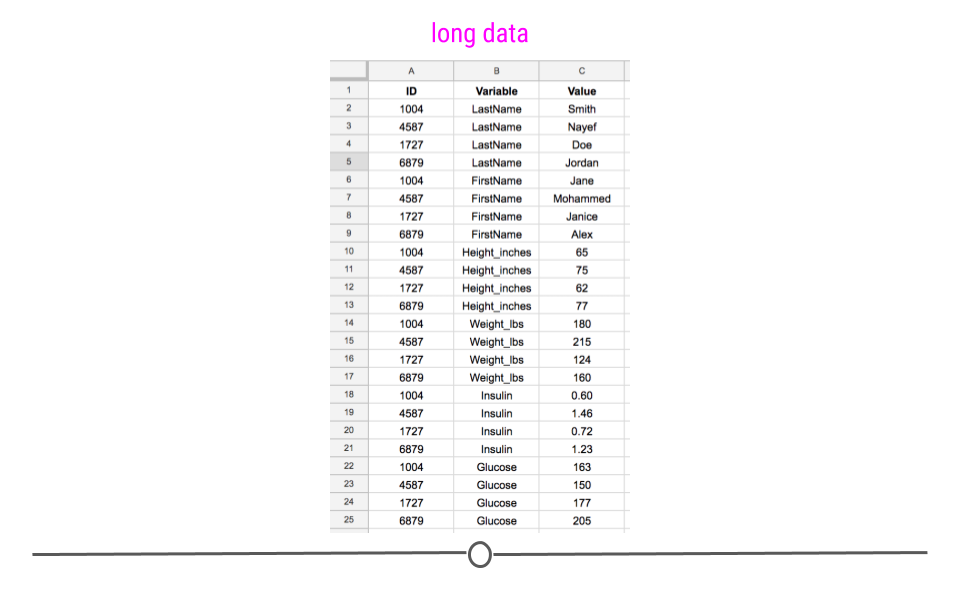

Long Data

Long data, on the other hand, has one column indicating the type of variable contained in that row and then a separate row for the value for that variable. Each row contains a single observation for a single variable. It's still a tidy datasets, but the information is stored in a long format:

Long dataset

This long dataset includes the exact same information as the previous wide dataset; it is just stored differently. It's harder to see visually how many different measurements were taken and on how many different people, but the same information is there.

While long data formats are less readable than wide data at a glance, they are often a lot easier to work with during analysis. Most of the tools we'll be working with use long data. Thus, to go from how data are often stored (wide) to working with the data during analysis (long), we'll need to understand what tools are needed to do this and how to work with them.

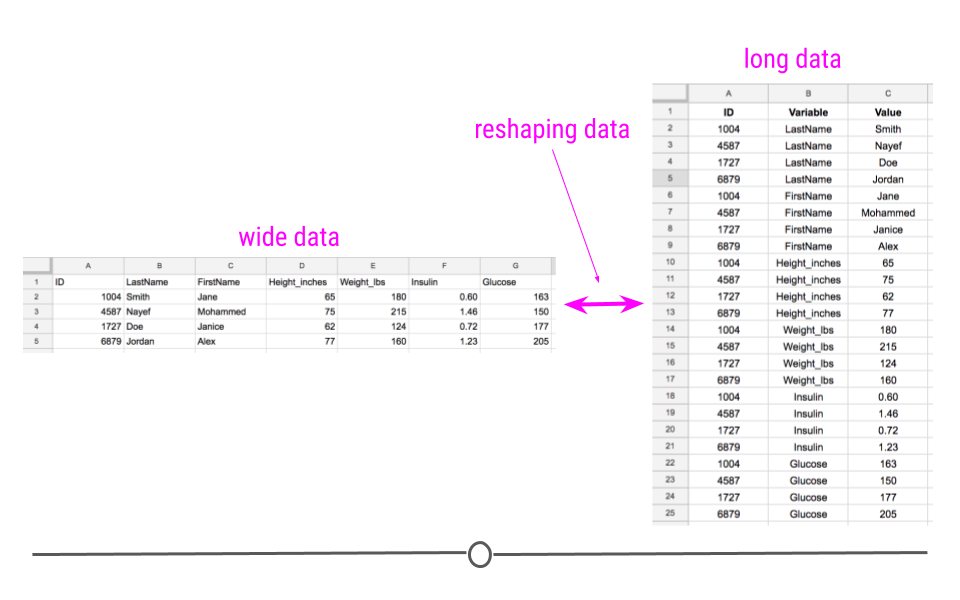

Reshaping the Data

Converting your data from wide-to-long or from long-to-wide data formats is referred to as reshaping your data.

Reshaping data

Within the tidyverse, tidyr is the go-to package for accomplishing this task. Within the tidyr package, you'll have to become familiar with a number of functions. The two most pertinent to reshaping data are: pivot_wider() and pivot_longer().

For these examples, we'll work with the airquality dataset available in R. The data in this dataset includes "Daily air quality measurements in New York, May to September 1973." This is a wide dataset because each day is in a separate row and there are multiple columns with each including information about a different variable (ozone, solar.r, wind, temp, month, and day).

We'll load in the tidyverse, so that we can convert this data.frame to a tibble and see the first few lines of this dataset using the following code:

library(tidyverse) airquality <- as_tibble(airquality) airquality ## # A tibble: 153 × 6 ## Ozone Solar.R Wind Temp Month Day ## <int> <int> <dbl> <int> <int> <int> ## 1 41 190 7.4 67 5 1 ## 2 36 118 8 72 5 2 ## 3 12 149 12.6 74 5 3 ## 4 18 313 11.5 62 5 4 ## 5 NA NA 14.3 56 5 5 ## 6 28 NA 14.9 66 5 6 ## 7 23 299 8.6 65 5 7 ## 8 19 99 13.8 59 5 8 ## 9 8 19 20.1 61 5 9 ## 10 NA 194 8.6 69 5 10 ## # … with 143 more rows Again, wide data are easy to decipher at a glance. We can see that we have six different variables for each day, with each one of these variables (measurements) being stored in a separate column.

tidyr

The tidyr package is part of the tidyverse, so its functionality is available to you since you've loaded in the tidyverse. The two main functions we mentioned above will help you reshape your data in the following ways:

-

pivot_longer(): go from wide data to long data -

pivot_wider(): go from long data to wide data

To get started, you'll need to be sure that the tidyr package is installed and loaded into your RStudio session.

pivot_longer()

As data are often stored in wide formats, you'll likely use pivot_longer() a lot more frequently than you'll use pivot_wider(). This will allow you to get the data into a long format that will be easy to use for analysis.

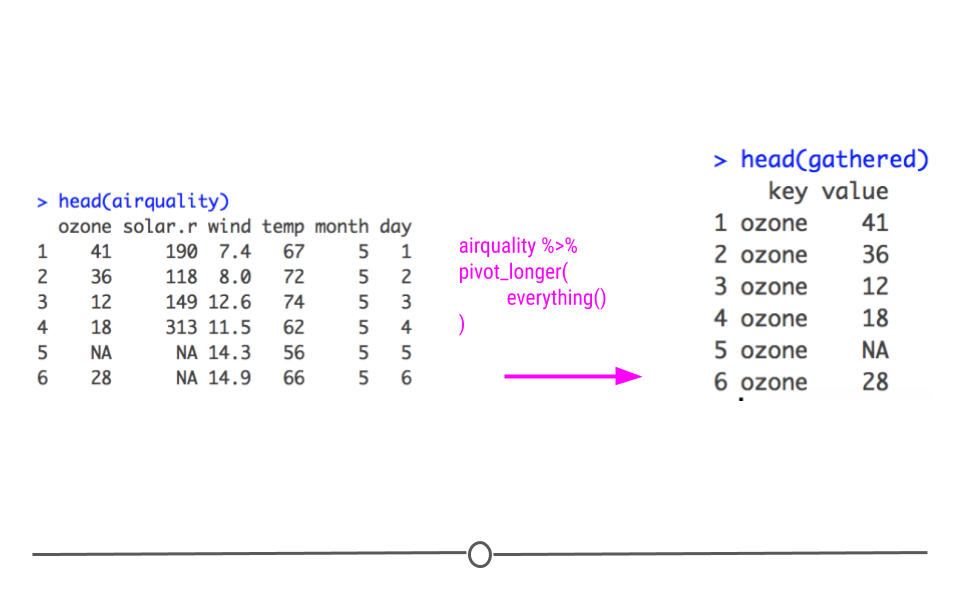

In tidyr, pivot_longer() will take the airquality dataset from wide to long, putting each column name into the first column and each corresponding value into the second column. Here, the first column will be called name. The second column will still be value.

## use pivot_longer() to reshape from wide to long gathered <- airquality %>% pivot_longer(everything()) ## take a look at first few rows of long data gathered ## # A tibble: 918 × 2 ## name value ## <chr> <dbl> ## 1 Ozone 41 ## 2 Solar.R 190 ## 3 Wind 7.4 ## 4 Temp 67 ## 5 Month 5 ## 6 Day 1 ## 7 Ozone 36 ## 8 Solar.R 118 ## 9 Wind 8 ## 10 Temp 72 ## # … with 908 more rows

Longer dataset

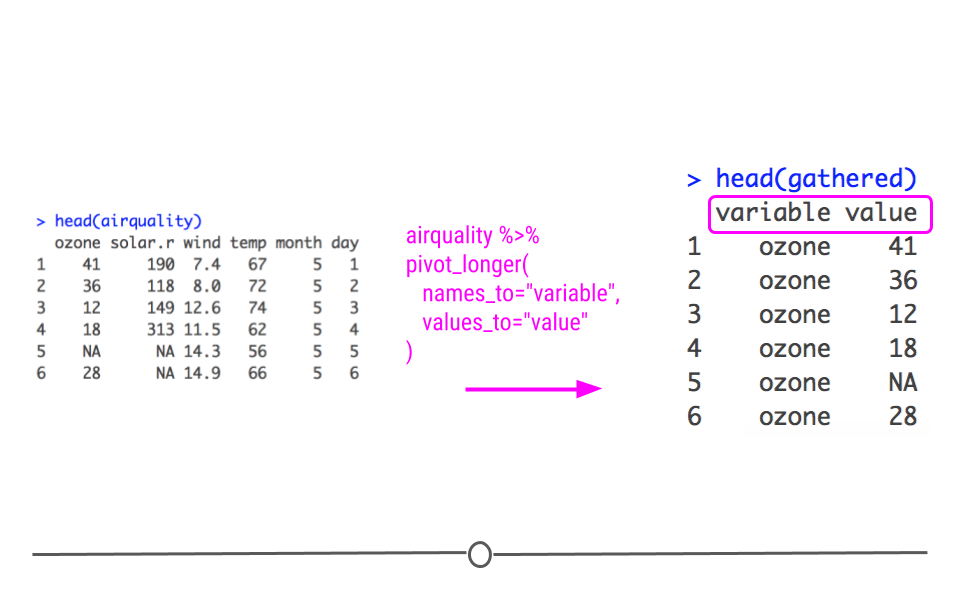

However, it's very easy to change the names of these columns within pivot_longer(). To do so you specify what the names_to and values_to columns names should be within pivot_longer():

## to rename the column names that gather provides, ## change key and value to what you want those column names to be gathered <- airquality %>% pivot_longer(everything(), names_to = "variable", values_to = "value") ## take a look at first few rows of long data gathered ## # A tibble: 918 × 2 ## variable value ## <chr> <dbl> ## 1 Ozone 41 ## 2 Solar.R 190 ## 3 Wind 7.4 ## 4 Temp 67 ## 5 Month 5 ## 6 Day 1 ## 7 Ozone 36 ## 8 Solar.R 118 ## 9 Wind 8 ## 10 Temp 72 ## # … with 908 more rows

gather column names changed

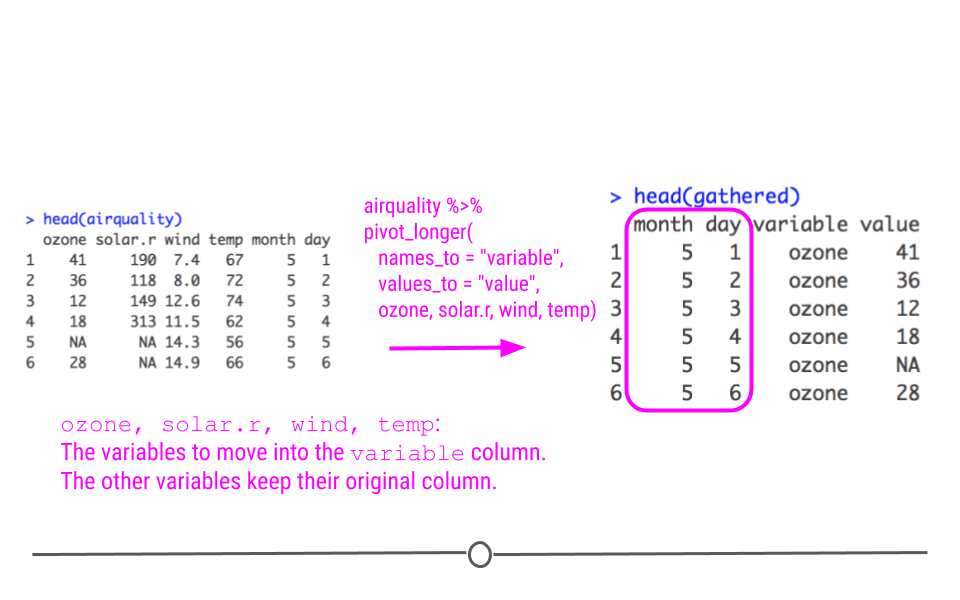

However, you're likely not interested in your day and month variable being separated out into their own variables within the variable column. In fact, knowing the day and month associated with a particular data point helps identify that particular data point. To account for this, you can exclude day and month from the variables being included in the variable column by specifying all the variables that you do want included in the variable column. Here, that means specifying Ozone, Solar.R, Wind, and Temp. This will keep Day and Month in their own columns, allowing each row to be identified by the specific day and month being discussed.

## in pivot_longer(), you can specify which variables ## you want included in the long format ## it will leave the other variables as is gathered <- airquality %>% pivot_longer(c(Ozone, Solar.R, Wind, Temp), names_to = "variable", values_to = "value") ## take a look at first few rows of long data gathered ## # A tibble: 612 × 4 ## Month Day variable value ## <int> <int> <chr> <dbl> ## 1 5 1 Ozone 41 ## 2 5 1 Solar.R 190 ## 3 5 1 Wind 7.4 ## 4 5 1 Temp 67 ## 5 5 2 Ozone 36 ## 6 5 2 Solar.R 118 ## 7 5 2 Wind 8 ## 8 5 2 Temp 72 ## 9 5 3 Ozone 12 ## 10 5 3 Solar.R 149 ## # … with 602 more rows

gather specifying which variables to include in long format

Now, when you look at the top of this object, you'll see that Month and Day remain in the data frame and that variable combines information from the other columns in airquality (Ozone, Solar.R, Wind, Temp). This is still a long format dataset; however, it has used Month and Day as IDs when reshaping the data frame.

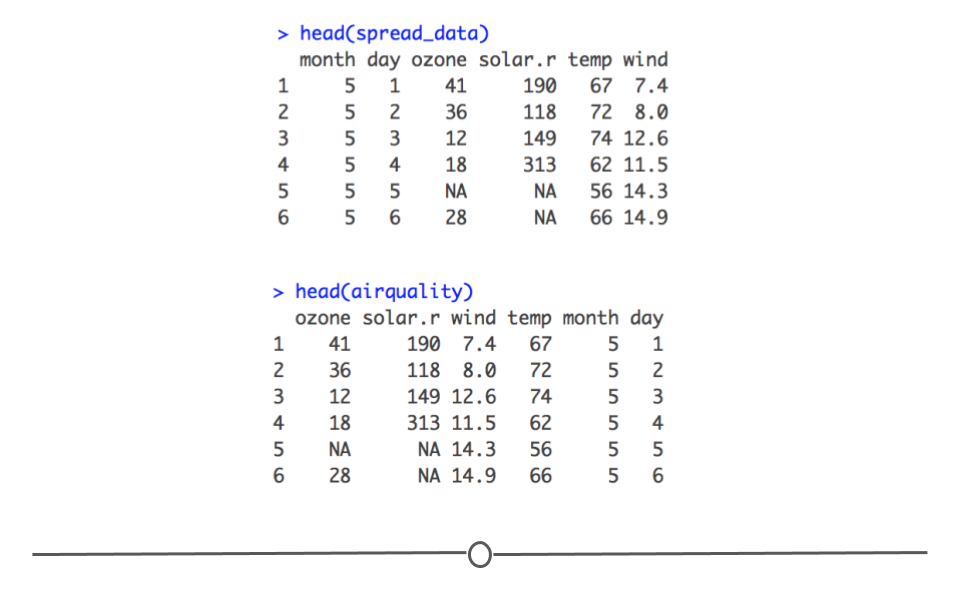

pivot_wider()

To return your long data back to its original form, you can use pivot_wider(). Here you specify two columns: the column that contains the names of what your wide data columns should be (names_from) and the column that contains the values that should go in these columns (values_from). The data frame resulting from pivot_wider() will have the original information back in the wide format (again, the columns will be in a different order). But, we'll discuss how to rearrange data in the next lesson!

## use pivot_wider() to reshape from long to wide spread_data <- gathered %>% pivot_wider(names_from = "variable", values_from = "value") ## take a look at the wide data spread_data ## # A tibble: 153 × 6 ## Month Day Ozone Solar.R Wind Temp ## <int> <int> <dbl> <dbl> <dbl> <dbl> ## 1 5 1 41 190 7.4 67 ## 2 5 2 36 118 8 72 ## 3 5 3 12 149 12.6 74 ## 4 5 4 18 313 11.5 62 ## 5 5 5 NA NA 14.3 56 ## 6 5 6 28 NA 14.9 66 ## 7 5 7 23 299 8.6 65 ## 8 5 8 19 99 13.8 59 ## 9 5 9 8 19 20.1 61 ## 10 5 10 NA 194 8.6 69 ## # … with 143 more rows ## compare that back to the original airquality ## # A tibble: 153 × 6 ## Ozone Solar.R Wind Temp Month Day ## <int> <int> <dbl> <int> <int> <int> ## 1 41 190 7.4 67 5 1 ## 2 36 118 8 72 5 2 ## 3 12 149 12.6 74 5 3 ## 4 18 313 11.5 62 5 4 ## 5 NA NA 14.3 56 5 5 ## 6 28 NA 14.9 66 5 6 ## 7 23 299 8.6 65 5 7 ## 8 19 99 13.8 59 5 8 ## 9 8 19 20.1 61 5 9 ## 10 NA 194 8.6 69 5 10 ## # … with 143 more rows

spread data

While reshaping data may not read like the most exciting topic, having this skill will be indispensable as you start working with data. It's best to get these skills down pat early!

Data Wrangling

Once you've read your data into R and have it in the appropriately wide- or long-format, it's time to wrangle the data, so that it is in the appropriate format and includes the information you need.

R Packages

While there are tons of R packages out there to help you work with data, we're going to cover the packages and functions within those packages that you'll absolutely want and need to work with when working with data.

dplyr

There is a package specifically designed for helping you wrangle your data. This package is called dplyr and will allow you to easily accomplish many of the data wrangling tasks necessary. Like tidyr, this package is a core package within the tidyverse, and thus it was loaded in for you when you ran library(tidyverse) earlier. We will cover a number of functions that will help you wrangle data using dplyr:

-

%>%- pipe operator for chaining a sequence of operations -

glimpse()- get an overview of what's included in dataset -

filter()- filter rows -

select()- select, rename, and reorder columns -

rename()- rename columns -

arrange()- reorder rows -

mutate()- create a new column -

group_by()- group variables -

summarize()- summarize information within a dataset -

left_join()- combine data across data frame -

tally()- get overall sum of values of specified column(s) or the number of rows of tibble -

count()- get counts of unique values of specified column(s) (shortcut ofgroup_by()andtally()) -

add_count()- add values ofcount()as a new column -

add_tally()- add value(s) oftally()as a new column

tidyr

We will also return to the tidyr package. The same package that we used to reshape our data will be helpful when wrangling data. The main functions we'll cover from tidyr are:

-

unite()- combine contents of two or more columns into a single column -

separate()- separate contents of a column into two or more columns

janitor

The third package we'll include here is the janitor package. While not a core tidyverse package, this tidyverse-adjacent package provides tools for cleaning messy data. The main functions we'll cover from janitor are:

-

clean_names()- clean names of a data frame -

tabyl()- get a helpful summary of a variable -

get_dupes()- identify duplicate observations

If you have not already, you'll want to be sure this package is installed and loaded:

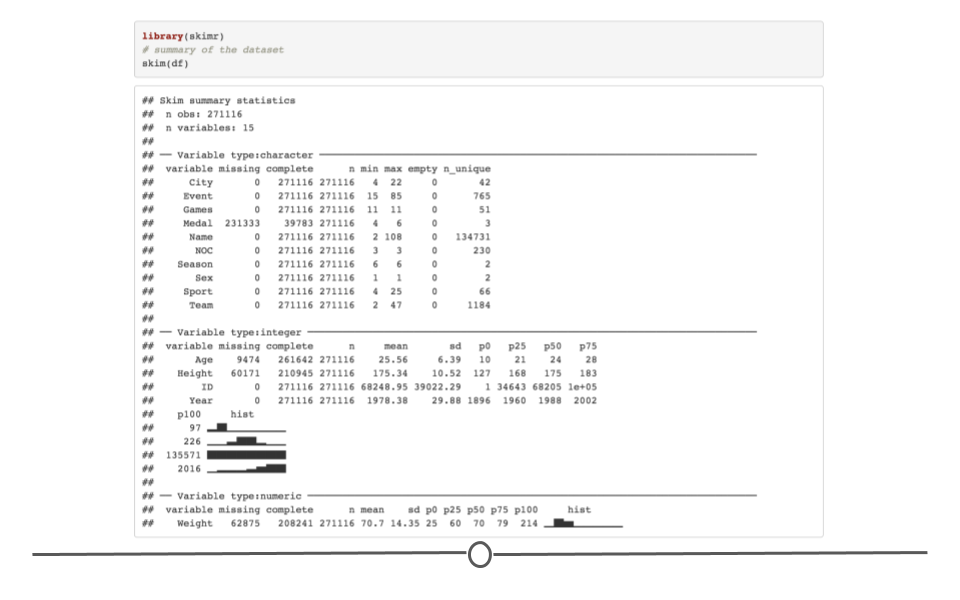

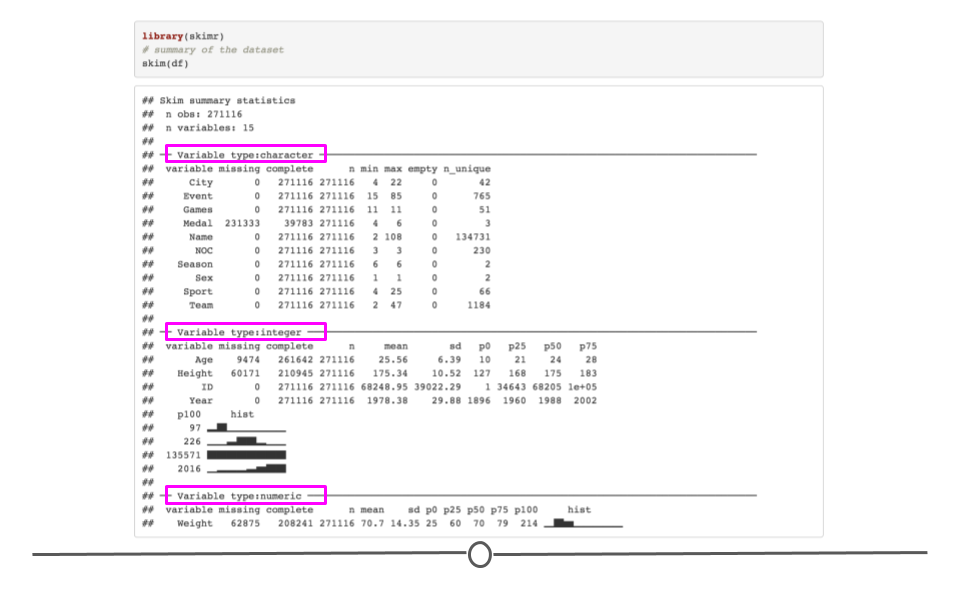

#install.packages('janitor') library(janitor) skimr

The final package we'll discuss here is the skimr package. This package provides a quick way to summarize a data.frame or tibble within the tidy data framework. We'll discuss its most useful function here:

-

skim()- summarize a data frame

If you have not already, you'll want to be sure this package is installed and loaded:

#install.packages('skimr') library(skimr) The Pipe Operator

Before we get into the important functions within dplyr, it will be very useful to discuss what is known as the pipe operator. The pipe operator looks like this in R: %>%. Whenever you see the pipe %>%, think of the word "then," so if you saw the sentence "I went to the the store and %>% I went back to my house," you would read this as I went to the store and then I went back to my house. The pipe tells you to do one thing and then do another.

Generally, the pipe operator allows you to string a number of different functions together in a particular order. If you wanted to take data frame A and carry out function B on it in R, you could depict this with an arrow pointing from A to B:

A –> B

Here you are saying, "Take A and then feed it into function B."

In base R syntax, what is depicted by the arrow above would be carried out by calling the function B on the data frame object A:

B(A) Alternatively, you could use the pipe operator (%>%):

A %>% B However, often you are not performing just one action on a data frame, but rather you are looking to carry out multiple functions. We can again depict this with an arrow diagram.

A –> B –> C –> D

Here you are saying that you want to take data frame A and carry out function B, then you want to take the output from that and then carry out function C. Subsequently you want to take the output of that and then carry out function D. In R syntax, we would first apply function B to data frame A, then apply function C to this output, then apply function D to this output. This results in the following syntax that is hard to read because multiple calls to functions are nested within each other:

D(C(B(A))) Alternatively, you could use the pipe operator. Each time you want take the output of one function and carry out something new on that output, you will use the pipe operator:

A %>% B %>% C %>% D And, even more readable is when each of these steps is separated out onto its own individual line of code:

A %>% B %>% C %>% D While both of the previous two code examples would provide the same output, the one below is more readable, which is a large part of why pipes are used. It makes your code more understandable to you and others.

Below we'll use this pipe operator a lot. Remember, it takes output from the left hand side and feeds it into the function that comes after the pipe. You'll get a better understanding of how it works as you run the code below. But, when in doubt remember that the pipe operator should be read as then.

Filtering Data

When working with a large dataset, you're often interested in only working with a portion of the data at any one time. For example, if you had data on people from ages 0 to 100 years old, but you wanted to ask a question that only pertained to children, you would likely want to only work with data from those individuals who were less than 18 years old. To do this, you would want to filter your dataset to only include data from these select individuals. Filtering can be done by row or by column. We'll discuss the syntax in R for doing both. Please note that the examples in this lesson and the organization for this lesson were adapted from Suzan Baert's wonderful dplyr tutorials. Links to the all four tutorials can be found in the "Additional Resources" section at the bottom of this lesson.

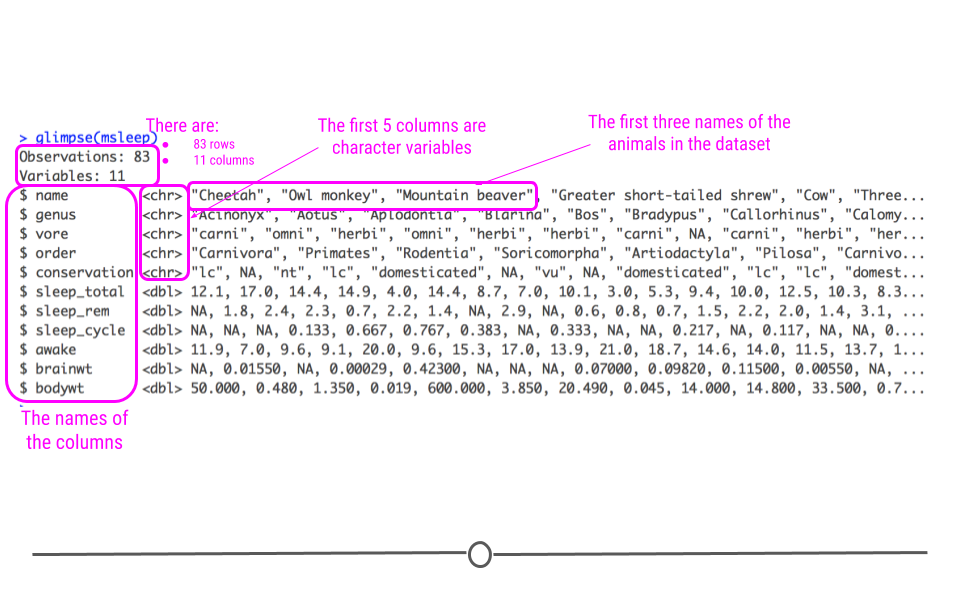

For the examples below, we'll be using a dataset from the ggplot2 package called msleep. (You'll learn more about this package in a later course on data visualization. For now, it's a core tidyverse package so it's loaded in along with the other tidyverse packages using library(tidyverse).) This dataset includes sleep times and weights from a number of different mammals. It has 83 rows, with each row including information about a different type of animal, and 11 variables. As each row is a different animal and each column includes information about that animal, this is a wide dataset.

To get an idea of what variables are included in this data frame, you can use glimpse(). This function summarizes how many rows there are (Observations) and how many columns there are (Variables). Additionally, it gives you a glimpse into the type of data contained in each column. Specifically, in this dataset, we know that the first column is name and that it contains a character vector (chr) and that the first three entries are "Cheetah," "Owl monkey," and "Mountain beaver." It works similarly to the base R summary() function.

## take a look at the data library(ggplot2) glimpse(msleep)

Glimpse of msleep dataset

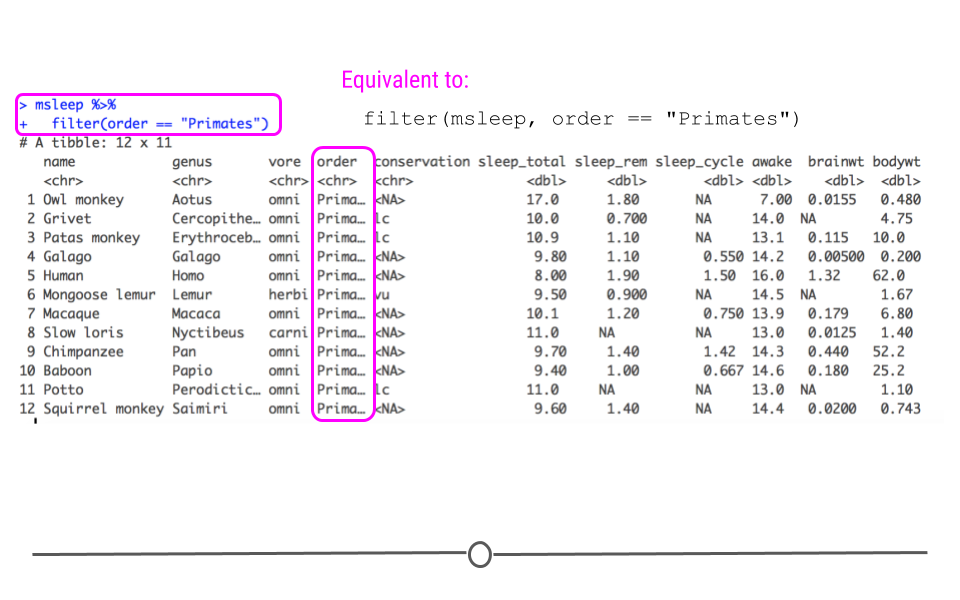

Filtering Rows

If you were only interested in learning more about the sleep times of "Primates," we could filter this dataset to include only data about those mammals that are also Primates. As we can see from glimpse(), this information is contained within the order variable. So to do this within R, we use the following syntax:

# filter to only include primates msleep %>% filter(order == "Primates") ## # A tibble: 12 × 11 ## name genus vore order conservation sleep_total sleep_rem sleep_cycle awake ## <chr> <chr> <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl> ## 1 Owl m… Aotus omni Prim… <NA> 17 1.8 NA 7 ## 2 Grivet Cerc… omni Prim… lc 10 0.7 NA 14 ## 3 Patas… Eryt… omni Prim… lc 10.9 1.1 NA 13.1 ## 4 Galago Gala… omni Prim… <NA> 9.8 1.1 0.55 14.2 ## 5 Human Homo omni Prim… <NA> 8 1.9 1.5 16 ## 6 Mongo… Lemur herbi Prim… vu 9.5 0.9 NA 14.5 ## 7 Macaq… Maca… omni Prim… <NA> 10.1 1.2 0.75 13.9 ## 8 Slow … Nyct… carni Prim… <NA> 11 NA NA 13 ## 9 Chimp… Pan omni Prim… <NA> 9.7 1.4 1.42 14.3 ## 10 Baboon Papio omni Prim… <NA> 9.4 1 0.667 14.6 ## 11 Potto Pero… omni Prim… lc 11 NA NA 13 ## 12 Squir… Saim… omni Prim… <NA> 9.6 1.4 NA 14.4 ## # … with 2 more variables: brainwt <dbl>, bodywt <dbl> Note that we are using the equality == comparison operator that you learned about in the previous course. Also note that we have used the pipe operator to feed the msleep data frame into the filter() function.

The above is shorthand for:

filter(msleep, order == "Primates") ## # A tibble: 12 × 11 ## name genus vore order conservation sleep_total sleep_rem sleep_cycle awake ## <chr> <chr> <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl> ## 1 Owl m… Aotus omni Prim… <NA> 17 1.8 NA 7 ## 2 Grivet Cerc… omni Prim… lc 10 0.7 NA 14 ## 3 Patas… Eryt… omni Prim… lc 10.9 1.1 NA 13.1 ## 4 Galago Gala… omni Prim… <NA> 9.8 1.1 0.55 14.2 ## 5 Human Homo omni Prim… <NA> 8 1.9 1.5 16 ## 6 Mongo… Lemur herbi Prim… vu 9.5 0.9 NA 14.5 ## 7 Macaq… Maca… omni Prim… <NA> 10.1 1.2 0.75 13.9 ## 8 Slow … Nyct… carni Prim… <NA> 11 NA NA 13 ## 9 Chimp… Pan omni Prim… <NA> 9.7 1.4 1.42 14.3 ## 10 Baboon Papio omni Prim… <NA> 9.4 1 0.667 14.6 ## 11 Potto Pero… omni Prim… lc 11 NA NA 13 ## 12 Squir… Saim… omni Prim… <NA> 9.6 1.4 NA 14.4 ## # … with 2 more variables: brainwt <dbl>, bodywt <dbl> The output is the same as above here, but the code is slightly less readable. This is why we use the pipe (%>%)!

Filtered to only include Primates

Now, we have a smaller dataset of only 12 mammals (as opposed to the original 83) and we can see that the order variable column only includes "Primates."

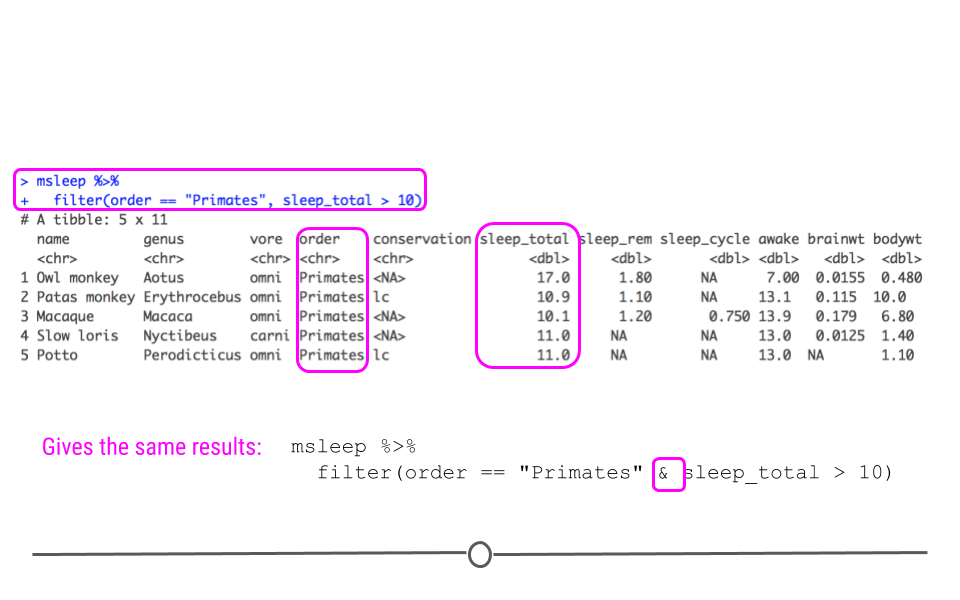

But, what if we were only interested in Primates who sleep more than 10 hours total per night? This information is in the sleep_total column. Fortunately, filter() also works on numeric variables. To accomplish this, you would use the following syntax, separating the multiple filters you want to apply with a comma:

msleep %>% filter(order == "Primates", sleep_total > 10) ## # A tibble: 5 × 11 ## name genus vore order conservation sleep_total sleep_rem sleep_cycle awake ## <chr> <chr> <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl> ## 1 Owl m… Aotus omni Prim… <NA> 17 1.8 NA 7 ## 2 Patas… Eryth… omni Prim… lc 10.9 1.1 NA 13.1 ## 3 Macaq… Macaca omni Prim… <NA> 10.1 1.2 0.75 13.9 ## 4 Slow … Nycti… carni Prim… <NA> 11 NA NA 13 ## 5 Potto Perod… omni Prim… lc 11 NA NA 13 ## # … with 2 more variables: brainwt <dbl>, bodywt <dbl> Note that we have used the "greater than" comparison operator with sleep_total.

Now, we have a dataset focused in on only 5 mammals, all of which are primates who sleep for more than 10 hours a night total.

Numerically filtered dataset

We can obtain the same result with the AND & logical operator instead of separating filtering conditions with a comma:

msleep %>% filter(order == "Primates" & sleep_total > 10) ## # A tibble: 5 × 11 ## name genus vore order conservation sleep_total sleep_rem sleep_cycle awake ## <chr> <chr> <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl> ## 1 Owl m… Aotus omni Prim… <NA> 17 1.8 NA 7 ## 2 Patas… Eryth… omni Prim… lc 10.9 1.1 NA 13.1 ## 3 Macaq… Macaca omni Prim… <NA> 10.1 1.2 0.75 13.9 ## 4 Slow … Nycti… carni Prim… <NA> 11 NA NA 13 ## 5 Potto Perod… omni Prim… lc 11 NA NA 13 ## # … with 2 more variables: brainwt <dbl>, bodywt <dbl> Note that the number of columns hasn't changed. All 11 variables are still shown in columns because the function filter() filters on rows, not columns.

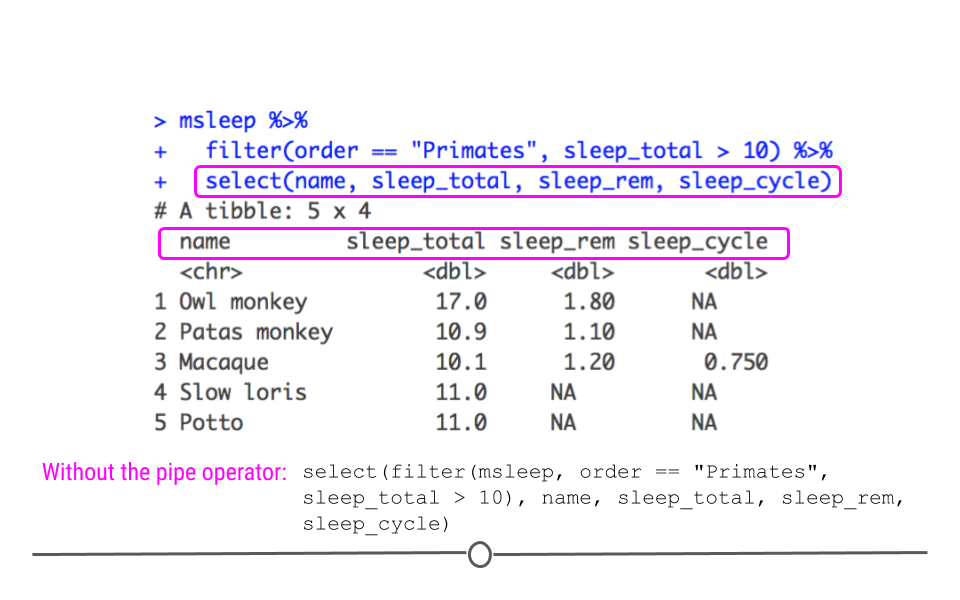

Selecting Columns

While filter() operates on rows, it is possible to filter your dataset to only include the columns you're interested in. To select columns so that your dataset only includes variables you're interested in, you will use select().

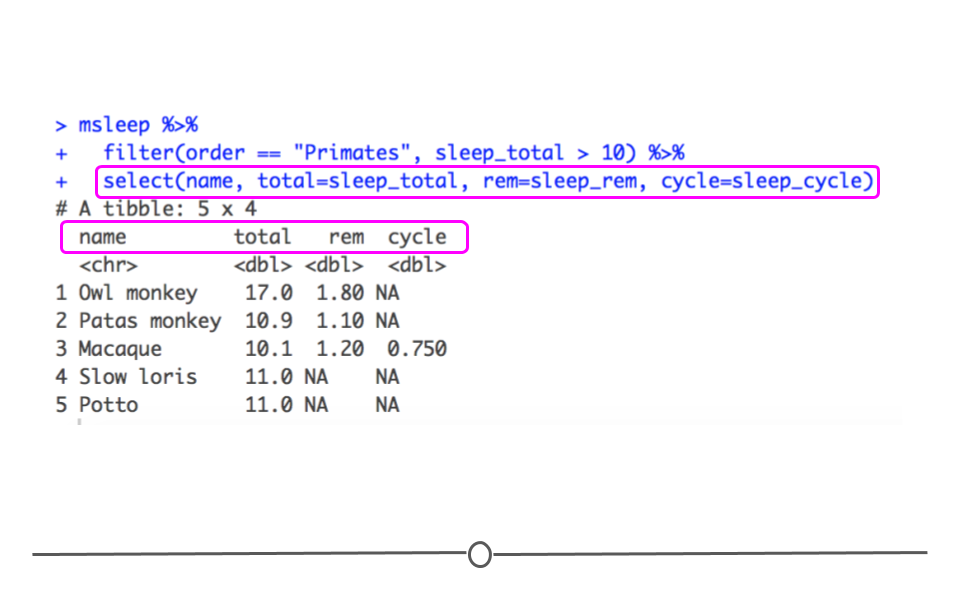

Let's start with the code we just wrote to only include primates who sleep a lot. What if we only want to include the first column (the name of the mammal) and the sleep information (included in the columns sleep_total, sleep_rem, and sleep_cycle)? We would do this by starting with the code we just used, adding another pipe, and using the function select(). Within select, we specify which columns we want in our output.

msleep %>% filter(order == "Primates", sleep_total > 10) %>% select(name, sleep_total, sleep_rem, sleep_cycle) ## # A tibble: 5 × 4 ## name sleep_total sleep_rem sleep_cycle ## <chr> <dbl> <dbl> <dbl> ## 1 Owl monkey 17 1.8 NA ## 2 Patas monkey 10.9 1.1 NA ## 3 Macaque 10.1 1.2 0.75 ## 4 Slow loris 11 NA NA ## 5 Potto 11 NA NA

Data with selected columns

Now, using select() we see that we still have the five rows we filtered to before, but we only have the four columns specified using select(). Here you can hopefully see the power of the pipe operator to chain together several commands in a row. Without the pipe operator, the full command would look like this:

select(filter(msleep, order == "Primates", sleep_total > 10), name, sleep_total, sleep_rem, sleep_cycle) Yuck. Definitely harder to read. We'll stick with the above approach!

Renaming Columns

select() can also be used to rename columns. To do so, you use the syntax: new_column_name = old_column_name within select. For example, to select the same columns and rename them total, rem and cycle, you would use the following syntax:

msleep %>% filter(order == "Primates", sleep_total > 10) %>% select(name, total = sleep_total, rem = sleep_rem, cycle = sleep_cycle) ## # A tibble: 5 × 4 ## name total rem cycle ## <chr> <dbl> <dbl> <dbl> ## 1 Owl monkey 17 1.8 NA ## 2 Patas monkey 10.9 1.1 NA ## 3 Macaque 10.1 1.2 0.75 ## 4 Slow loris 11 NA NA ## 5 Potto 11 NA NA

Data with renamed columns names with select()

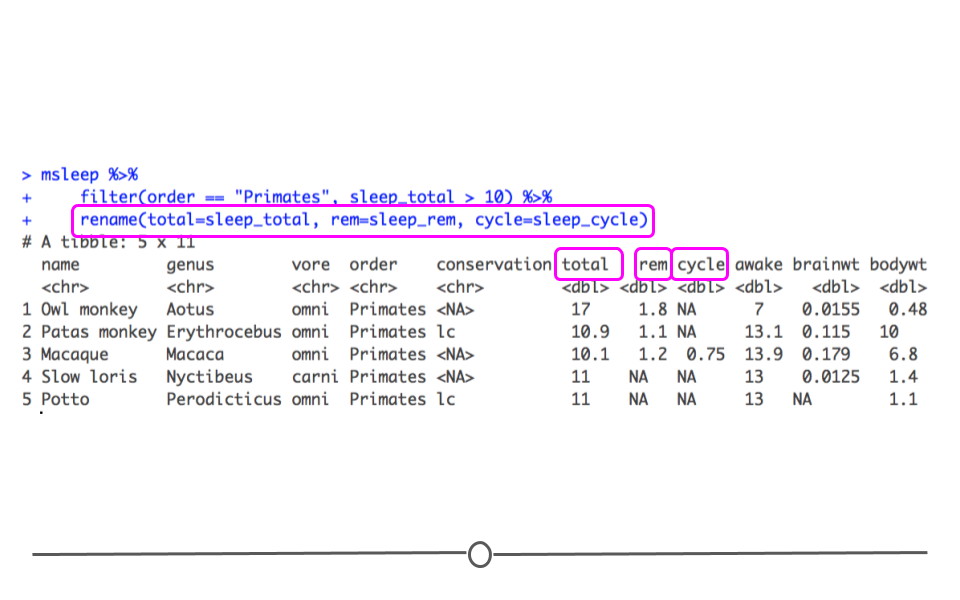

It's important to keep in mind that when using select() to rename columns, only the specified columns will be included and renamed in the output. If you, instead, want to change the names of a few columns but return all columns in your output, you'll want to use rename(). For example, the following, returns a data frame with all 11 columns, where the column names for three columns specified within rename() function have been renamed.

msleep %>% filter(order == "Primates", sleep_total > 10) %>% rename(total = sleep_total, rem = sleep_rem, cycle = sleep_cycle) ## # A tibble: 5 × 11 ## name genus vore order conservation total rem cycle awake brainwt bodywt ## <chr> <chr> <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> ## 1 Owl mo… Aotus omni Prim… <NA> 17 1.8 NA 7 0.0155 0.48 ## 2 Patas … Eryth… omni Prim… lc 10.9 1.1 NA 13.1 0.115 10 ## 3 Macaque Macaca omni Prim… <NA> 10.1 1.2 0.75 13.9 0.179 6.8 ## 4 Slow l… Nycti… carni Prim… <NA> 11 NA NA 13 0.0125 1.4 ## 5 Potto Perod… omni Prim… lc 11 NA NA 13 NA 1.1

Data with renamed columns names using rename()

Reordering

In addition to filtering rows and columns, often, you'll want the data arranged in a particular order. It may order the columns in a logical way, or it could be to sort the data so that the data are sorted by value, with those having the smallest value in the first row and the largest value in the last row. All of this can be achieved with a few simple functions.

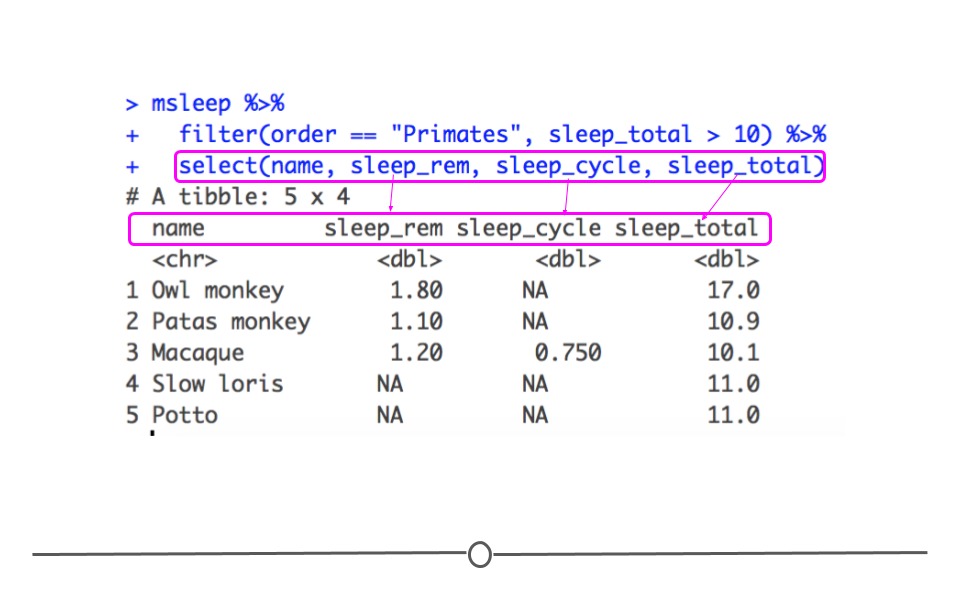

Reordering Columns

The select() function is powerful. Not only will it filter and rename columns, but it can also be used to reorder your columns. Using our example from above, if you wanted sleep_rem to be the first sleep column and sleep_total to be the last column, all you have to do is reorder them within select(). The output from select() would then be reordered to match the order specified within select().

msleep %>% filter(order == "Primates", sleep_total > 10) %>% select(name, sleep_rem, sleep_cycle, sleep_total) ## # A tibble: 5 × 4 ## name sleep_rem sleep_cycle sleep_total ## <chr> <dbl> <dbl> <dbl> ## 1 Owl monkey 1.8 NA 17 ## 2 Patas monkey 1.1 NA 10.9 ## 3 Macaque 1.2 0.75 10.1 ## 4 Slow loris NA NA 11 ## 5 Potto NA NA 11 Here we see that sleep_rem name is displayed first followed by sleep_rem, sleep_cycle, and sleep_total, just as it was specified within select().

Data with reordered columns names

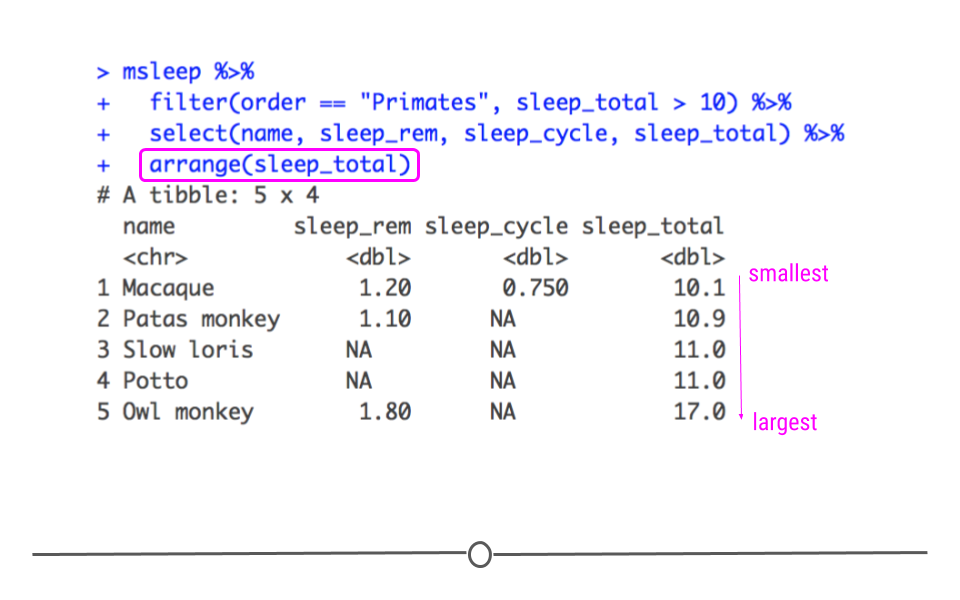

Reordering Rows

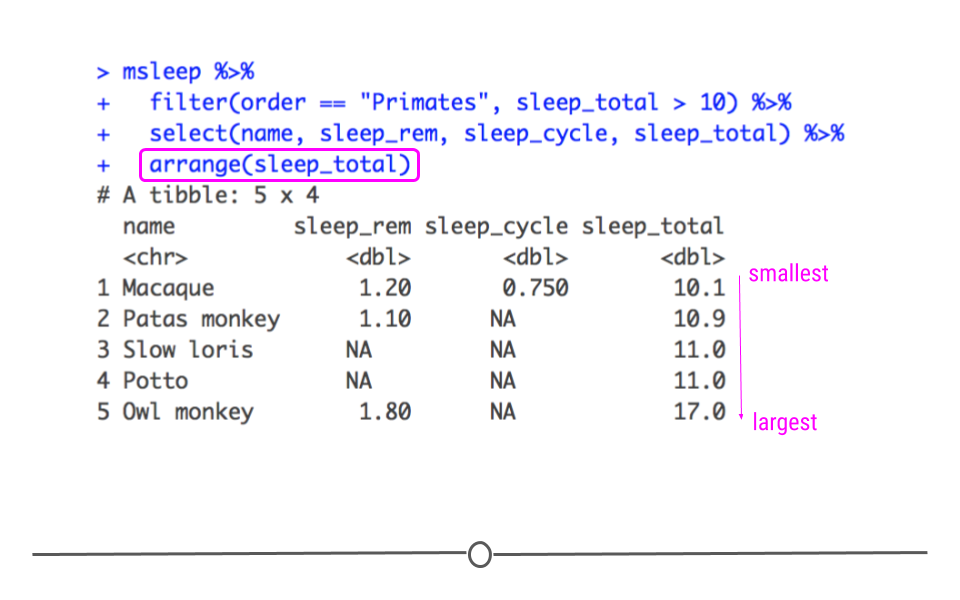

Rows can also be reordered. To reorder a variable in ascending order (from smallest to largest), you'll want to use arrange(). Continuing on from our example above, to now sort our rows by the amount of total sleep each mammal gets, we would use the following syntax:

msleep %>% filter(order == "Primates", sleep_total > 10) %>% select(name, sleep_rem, sleep_cycle, sleep_total) %>% arrange(sleep_total) ## # A tibble: 5 × 4 ## name sleep_rem sleep_cycle sleep_total ## <chr> <dbl> <dbl> <dbl> ## 1 Macaque 1.2 0.75 10.1 ## 2 Patas monkey 1.1 NA 10.9 ## 3 Slow loris NA NA 11 ## 4 Potto NA NA 11 ## 5 Owl monkey 1.8 NA 17

Data arranged by total sleep in ascending order

While arrange sorts variables in ascending order, it's also possible to sort in descending (largest to smallest) order. To do this you just use desc() with the following syntax:

msleep %>% filter(order == "Primates", sleep_total > 10) %>% select(name, sleep_rem, sleep_cycle, sleep_total) %>% arrange(desc(sleep_total)) ## # A tibble: 5 × 4 ## name sleep_rem sleep_cycle sleep_total ## <chr> <dbl> <dbl> <dbl> ## 1 Owl monkey 1.8 NA 17 ## 2 Slow loris NA NA 11 ## 3 Potto NA NA 11 ## 4 Patas monkey 1.1 NA 10.9 ## 5 Macaque 1.2 0.75 10.1 By putting sleep_total within desc(), arrange() will now sort your data from the primates with the longest total sleep to the shortest.

Data arranged by total sleep in descending order

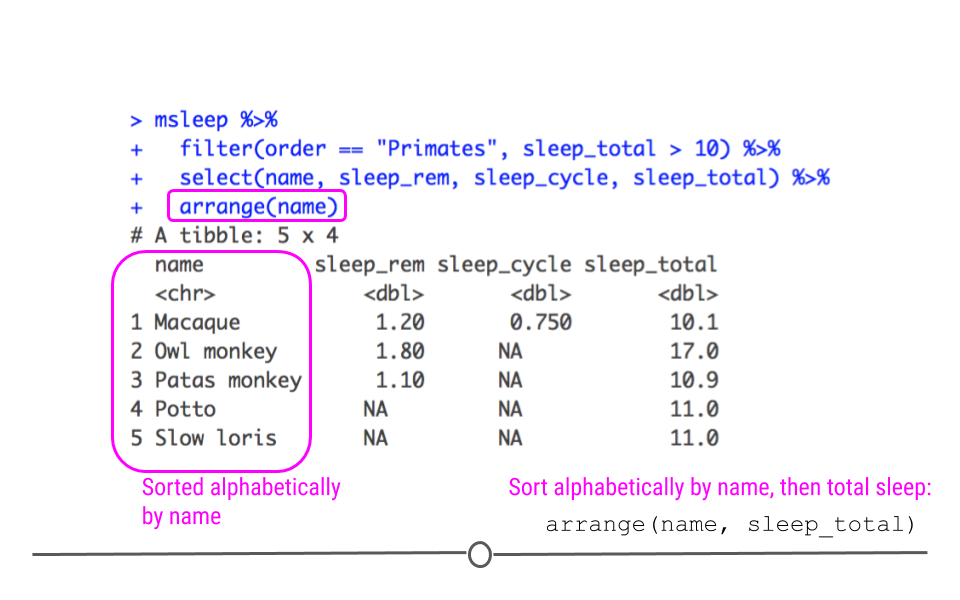

arrange() can also be used to order non-numeric variables. For example, arrange() will sort character vectors alphabetically.

msleep %>% filter(order == "Primates", sleep_total > 10) %>% select(name, sleep_rem, sleep_cycle, sleep_total) %>% arrange(name) ## # A tibble: 5 × 4 ## name sleep_rem sleep_cycle sleep_total ## <chr> <dbl> <dbl> <dbl> ## 1 Macaque 1.2 0.75 10.1 ## 2 Owl monkey 1.8 NA 17 ## 3 Patas monkey 1.1 NA 10.9 ## 4 Potto NA NA 11 ## 5 Slow loris NA NA 11

Data arranged alphabetically by name

If you would like to reorder rows based on information in multiple columns, you can specify them separated by commas. This is useful if you have repeated labels in one column and want to sort within a category based on information in another column. In the example here, if there were repeated primates, this would sort the repeats based on their total sleep.

msleep %>% filter(order == "Primates", sleep_total > 10) %>% select(name, sleep_rem, sleep_cycle, sleep_total) %>% arrange(name, sleep_total) ## # A tibble: 5 × 4 ## name sleep_rem sleep_cycle sleep_total ## <chr> <dbl> <dbl> <dbl> ## 1 Macaque 1.2 0.75 10.1 ## 2 Owl monkey 1.8 NA 17 ## 3 Patas monkey 1.1 NA 10.9 ## 4 Potto NA NA 11 ## 5 Slow loris NA NA 11 Creating New Columns

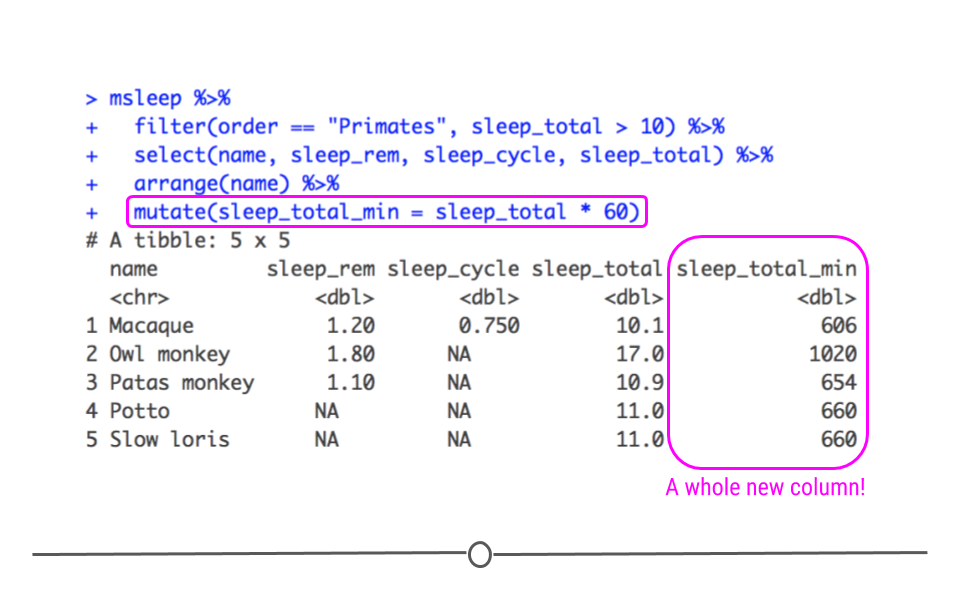

You will often find when working with data that you need an additional column. For example, if you had two datasets you wanted to combine, you may want to make a new column in each dataset called dataset. In one dataset you may put datasetA in each row. In the second dataset, you could put datasetB. This way, once you combined the data, you would be able to keep track of which dataset each row came from originally. More often, however, you'll likely want to create a new column that calculates a new variable based on information in a column you already have. For example, in our mammal sleep dataset, sleep_total is in hours. What if you wanted to have that information in minutes? You could create a new column with this very information! The function mutate() was made for all of these new-column-creating situations. This function has a lot of capabilities. We'll cover the basics here.

Returning to our msleep dataset, after filtering and re-ordering, we can create a new column with mutate(). Within mutate(), we will calculate the number of minutes each mammal sleeps by multiplying the number of hours each animal sleeps by 60 minutes.

msleep %>% filter(order == "Primates", sleep_total > 10) %>% select(name, sleep_rem, sleep_cycle, sleep_total) %>% arrange(name) %>% mutate(sleep_total_min = sleep_total * 60) ## # A tibble: 5 × 5 ## name sleep_rem sleep_cycle sleep_total sleep_total_min ## <chr> <dbl> <dbl> <dbl> <dbl> ## 1 Macaque 1.2 0.75 10.1 606 ## 2 Owl monkey 1.8 NA 17 1020 ## 3 Patas monkey 1.1 NA 10.9 654 ## 4 Potto NA NA 11 660 ## 5 Slow loris NA NA 11 660

Mutate to add new column to data

Separating Columns

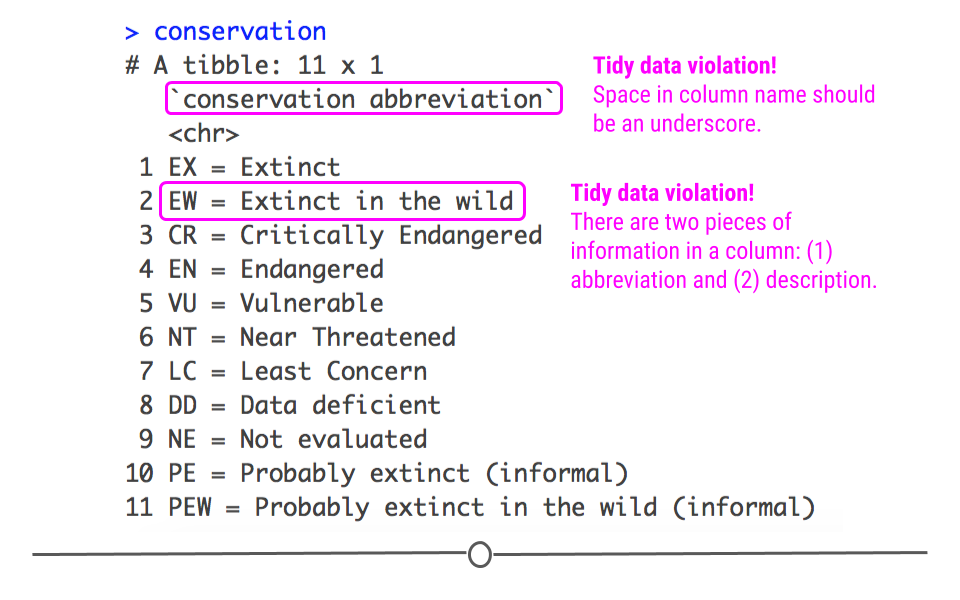

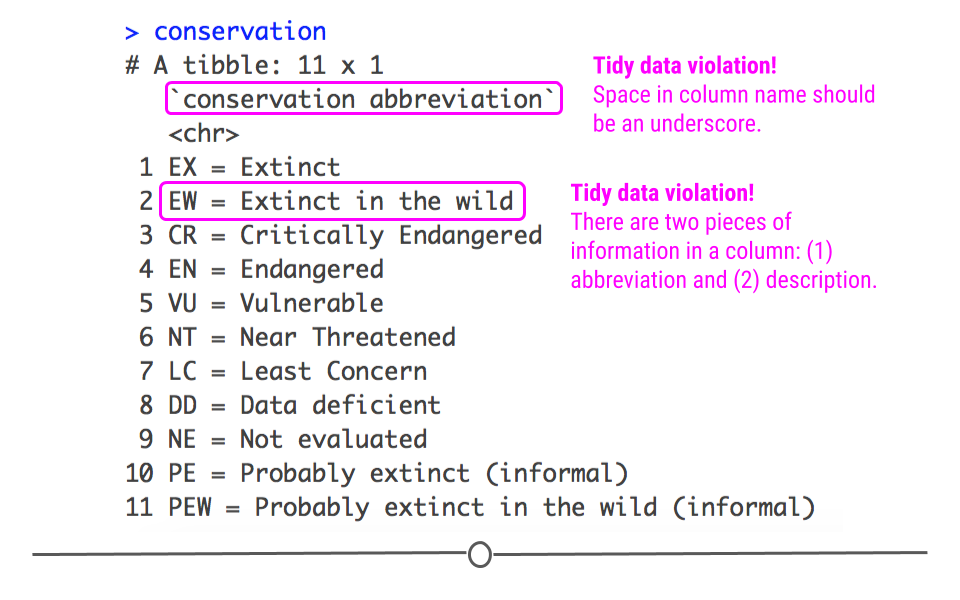

Sometimes multiple pieces of information are merged within a single column even though it would be more useful during analysis to have those pieces of information in separate columns. To demonstrate, we'll now move from the msleep dataset to talking about another dataset that includes information about conservation abbreviations in a single column.

To read this file into R, we'll use the readr package.

## download file conservation <- read_csv("https://raw.githubusercontent.com/suzanbaert/Dplyr_Tutorials/master/conservation_explanation.csv") ## take a look at this file conservation ## # A tibble: 11 × 1 ## `conservation abbreviation` ## <chr> ## 1 EX = Extinct ## 2 EW = Extinct in the wild ## 3 CR = Critically Endangered ## 4 EN = Endangered ## 5 VU = Vulnerable ## 6 NT = Near Threatened ## 7 LC = Least Concern ## 8 DD = Data deficient ## 9 NE = Not evaluated ## 10 PE = Probably extinct (informal) ## 11 PEW = Probably extinct in the wild (informal)

Conservation dataset

In this dataset, we see that there is a single column that includes both the abbreviation for the conservation term as well as what that abbreviation means. Recall that this violates one of the tidy data principles covered in the first lesson: Put just one thing in a cell. To work with these data, you could imagine that you may want these two pieces of information (the abbreviation and the description) in two different columns. To accomplish this in R, you'll want to use separate() from tidyr.

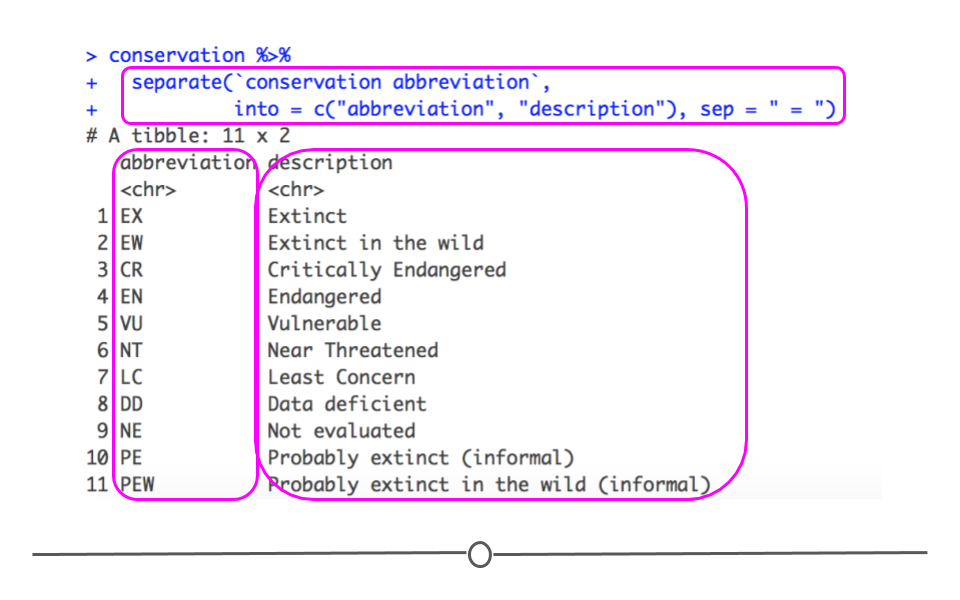

The separate() function requires the name of the existing column that you want to separate (conservation abbreviation), the desired column names of the resulting separated columns (into = c("abbreviation", "description")), and the characters that currently separate the pieces of information (sep = " = "). We have to put conservation abbreviation in back ticks in the code below because the column name contains a space. Without the back ticks, R would think that conservation and abbreviation were two separate things. This is another violation of tidy data! Variable names should have underscores, not spaces!

conservation %>% separate(` conservation abbreviation `, into = c("abbreviation", "description"), sep = " = ") ## # A tibble: 11 × 2 ## abbreviation description ## <chr> <chr> ## 1 EX Extinct ## 2 EW Extinct in the wild ## 3 CR Critically Endangered ## 4 EN Endangered ## 5 VU Vulnerable ## 6 NT Near Threatened ## 7 LC Least Concern ## 8 DD Data deficient ## 9 NE Not evaluated ## 10 PE Probably extinct (informal) ## 11 PEW Probably extinct in the wild (informal) The output of this code shows that we now have two separate columns with the information in the original column separated out into abbreviation and description.

Output of separate()

Merging Columns

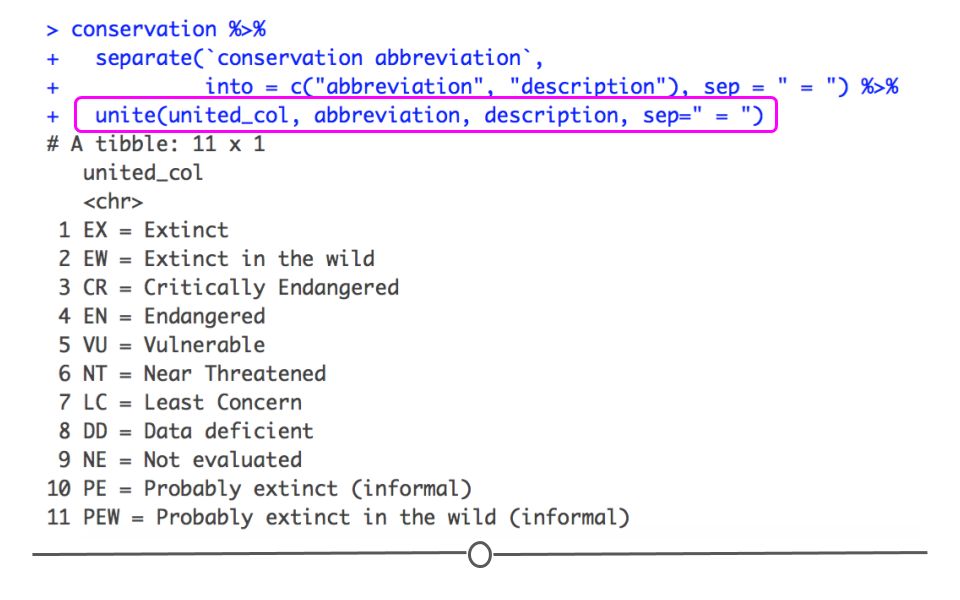

The opposite of separate() is unite(). So, if you have information in two or more different columns but wish it were in one single column, you'll want to use unite(). Using the code forming the two separate columns above, we can then add on an extra line of unite() code to re-join these separate columns, returning what we started with.

conservation %>% separate(` conservation abbreviation `, into = c("abbreviation", "description"), sep = " = ") %>% unite(united_col, abbreviation, description, sep = " = ") ## # A tibble: 11 × 1 ## united_col ## <chr> ## 1 EX = Extinct ## 2 EW = Extinct in the wild ## 3 CR = Critically Endangered ## 4 EN = Endangered ## 5 VU = Vulnerable ## 6 NT = Near Threatened ## 7 LC = Least Concern ## 8 DD = Data deficient ## 9 NE = Not evaluated ## 10 PE = Probably extinct (informal) ## 11 PEW = Probably extinct in the wild (informal)

Output of unite()

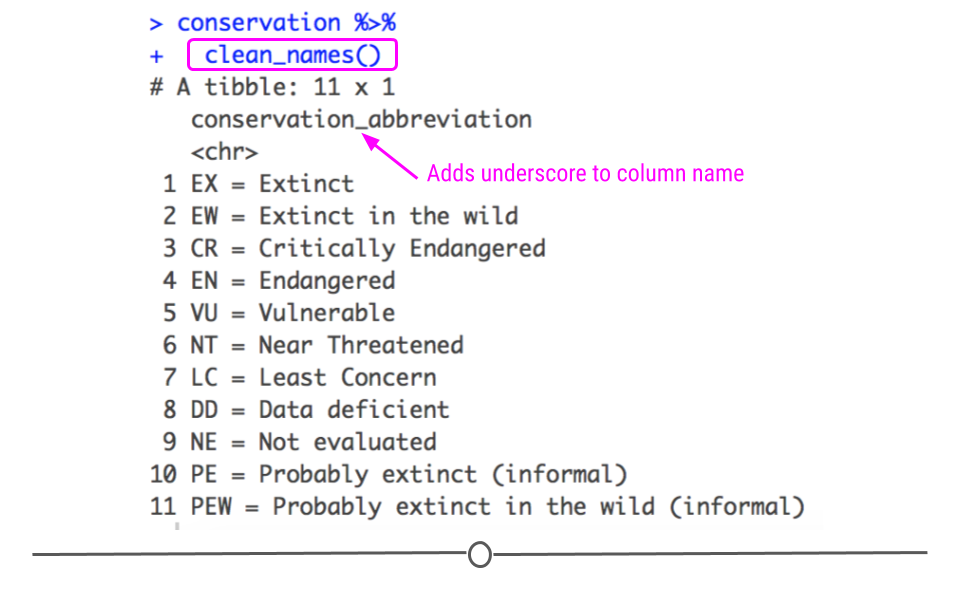

Cleaning Column Names

While maybe not quite as important as some of the other functions mentioned in this lesson, a function that will likely prove very helpful as you start analyzing lots of different datasets is clean_names() from the janitor package. This function takes the existing column names of your dataset, converts them all to lowercase letters and numbers, and separates all words using the underscore character. For example, there is a space in the column name for conservation. The clean_names()function will convert conservation abbreviation to conservation_abbreviation. These cleaned up column names are a lot easier to work with when you have large datasets.

So remember this is what the data first looked like:

Conservation dataset

And now with "clean names" it looks like this:

conservation %>% clean_names() ## # A tibble: 11 × 1 ## conservation_abbreviation ## <chr> ## 1 EX = Extinct ## 2 EW = Extinct in the wild ## 3 CR = Critically Endangered ## 4 EN = Endangered ## 5 VU = Vulnerable ## 6 NT = Near Threatened ## 7 LC = Least Concern ## 8 DD = Data deficient ## 9 NE = Not evaluated ## 10 PE = Probably extinct (informal) ## 11 PEW = Probably extinct in the wild (informal)

clean_names() output

Combining Data Across Data Frames

There is often information stored in two separate data frames that you'll want in a single data frame. There are many different ways to join separate data frames. They are discussed in more detail in this tutorial from Jenny Bryan. Here, we'll demonstrate how the left_join() function works, as this is used frequently.

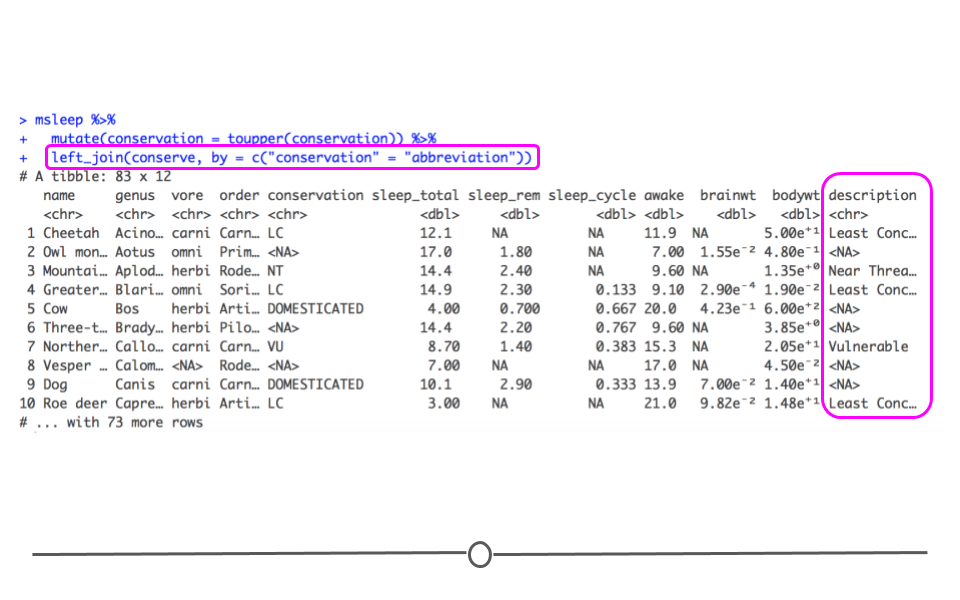

Let's try to combine the information from the two different datasets we've used in this lesson. We have msleep and conservation. The msleepdataset contains a column called conservation. This column includes lowercase abbreviations that overlap with the uppercase abbreviations in the abbreviation column in the conservation dataset.

To handle the fact that in one dataset the abbreviations are lowercase and the other they are uppercase, we'll use mutate() to take all the lowercase abbreviations to uppercase abbreviations using the function toupper().

We'll then use left_join() which takes all of the rows in the first dataset mentioned (msleep, below) and incorporates information from the second dataset mentioned (conserve, below), when information in the second dataset is available. The by = argument states what columns to join by in the first ("conservation") and second ("abbreviation") datasets. This join adds the description column from the conserve dataset onto the original dataset (msleep). Note that if there is no information in the second dataset that matches with the information in the first dataset, left_join() will add NA. Specifically, for rows where conservation is "DOMESTICATED" below, the description column will have NA because "DOMESTICATED"" is not an abbreviation in the conserve dataset.

## take conservation dataset and separate information ## into two columns ## call that new object `conserve` conserve <- conservation %>% separate(` conservation abbreviation `, into = c("abbreviation", "description"), sep = " = ") ## now lets join the two datasets together msleep %>% mutate(conservation = toupper(conservation)) %>% left_join(conserve, by = c("conservation" = "abbreviation"))

Data resulting from left_join

It's important to note that there are many other ways to join data, which we covered earlier in a previous course and are covered in more detail on this dplyr join cheatsheet from Jenny Bryan. For now, it's important to know that joining datasets is done easily in R using tools in dplyr. As you join data frames in your own work, it's a good idea to refer back to this cheatsheet for assistance.

Grouping Data

Often, data scientists will want to summarize information in their dataset. You may want to know how many people are in a dataset. However, more often, you'll want to know how many people there are within a group in your dataset. For example, you may want to know how many males and how many females there are. To do this, grouping your data is necessary. Rather than looking at the total number of individuals, to accomplish this, you first have to group the data by the gender of the individuals. Then, you count within those groups. Grouping by variables within dplyr is straightforward.

group_by()

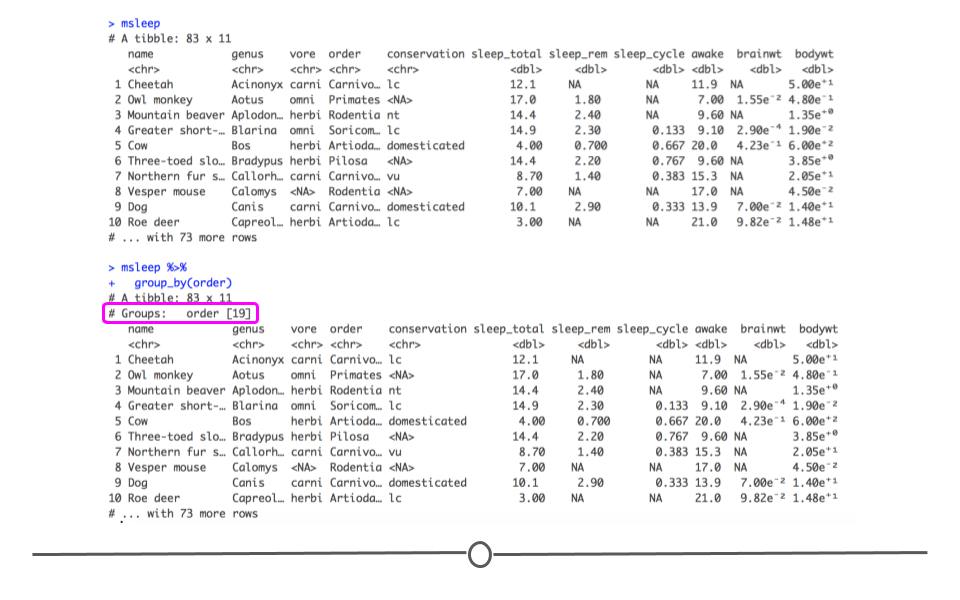

There is an incredibly helpful function within dplyr called group_by(). The group_by() function groups a dataset by one or more variables. On its own, it does not appear to change the dataset very much. The difference between the two outputs below is subtle:

msleep msleep %>% group_by(order)

group_by() output

In fact, the only aspect of the output that is different is that the number of different orders is now printed on your screen. However, in the next section, you'll see that the output from any further functions you carry out at this point will differ between the two datasets.

Summarizing Data

Throughout data cleaning and analysis it will be important to summarize information in your dataset. This may be for a formal report or for checking the results of a data tidying operation.

summarize()

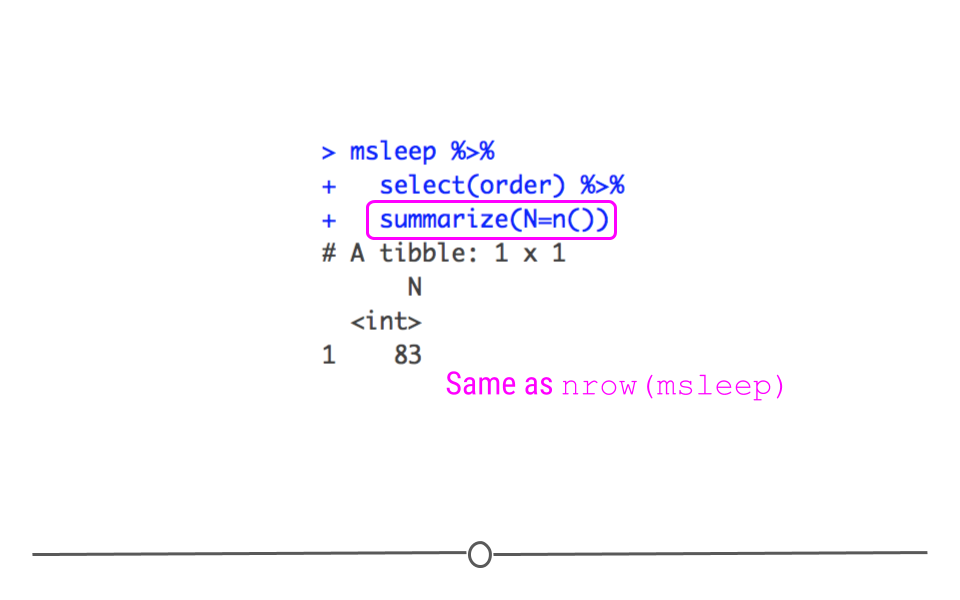

Continuing on from the previous examples, if you wanted to figure out how many samples are present in your dataset, you could use the summarize() function.

msleep %>% # here we select the column called genus, any column would work select(genus) %>% summarize(N= n()) ## # A tibble: 1 × 1 ## N ## <int> ## 1 83 msleep %>% # here we select the column called vore, any column would work select(vore) %>% summarize(N= n()) ## # A tibble: 1 × 1 ## N ## <int> ## 1 83 This provides a summary of the data with the new column name we specified above (N) and the number of samples in the dataset. Note that we could also obtain the same information by directly obtaining the number of rows in the data frame with nrow(msleep).

Summarize with n()

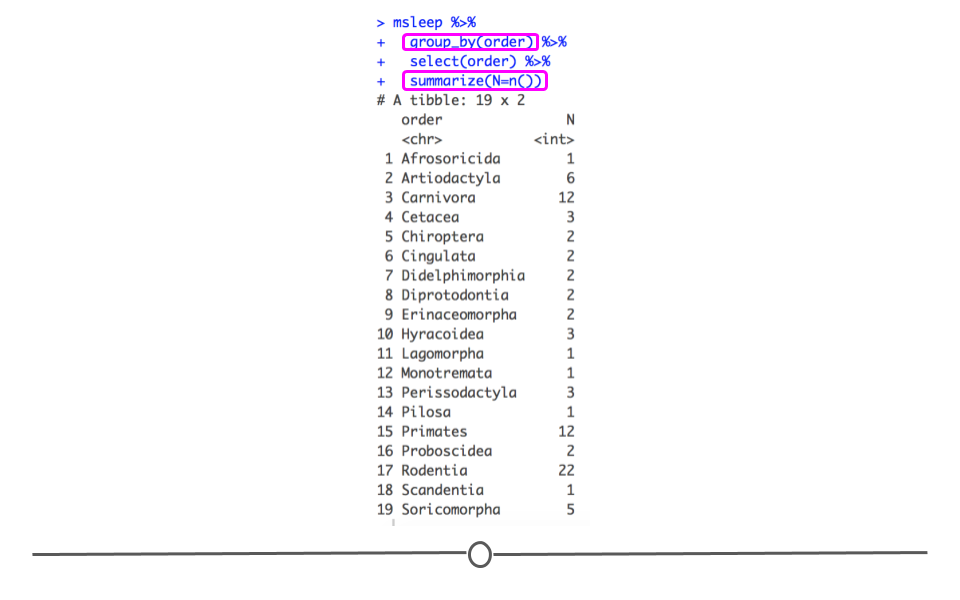

However, if you wanted to count how many of each different order of mammal you had. You would first group_by(order) and then use summarize(). This will summarize within group.

msleep %>% group_by(order) %>% select(order) %>% summarize(N= n()) ## # A tibble: 19 × 2 ## order N ## <chr> <int> ## 1 Afrosoricida 1 ## 2 Artiodactyla 6 ## 3 Carnivora 12 ## 4 Cetacea 3 ## 5 Chiroptera 2 ## 6 Cingulata 2 ## 7 Didelphimorphia 2 ## 8 Diprotodontia 2 ## 9 Erinaceomorpha 2 ## 10 Hyracoidea 3 ## 11 Lagomorpha 1 ## 12 Monotremata 1 ## 13 Perissodactyla 3 ## 14 Pilosa 1 ## 15 Primates 12 ## 16 Proboscidea 2 ## 17 Rodentia 22 ## 18 Scandentia 1 ## 19 Soricomorpha 5 The output from this, like above, includes the column name we specified in summarize (N). However, it includes the number of samples in the group_by variable we specified (order).

group_by() and summarize with n()

There are other ways in which the data can be summarized using summarize(). In addition to using n() to count the number of samples within a group, you can also summarize using other helpful functions within R, such as mean(), median(), min(), and max().

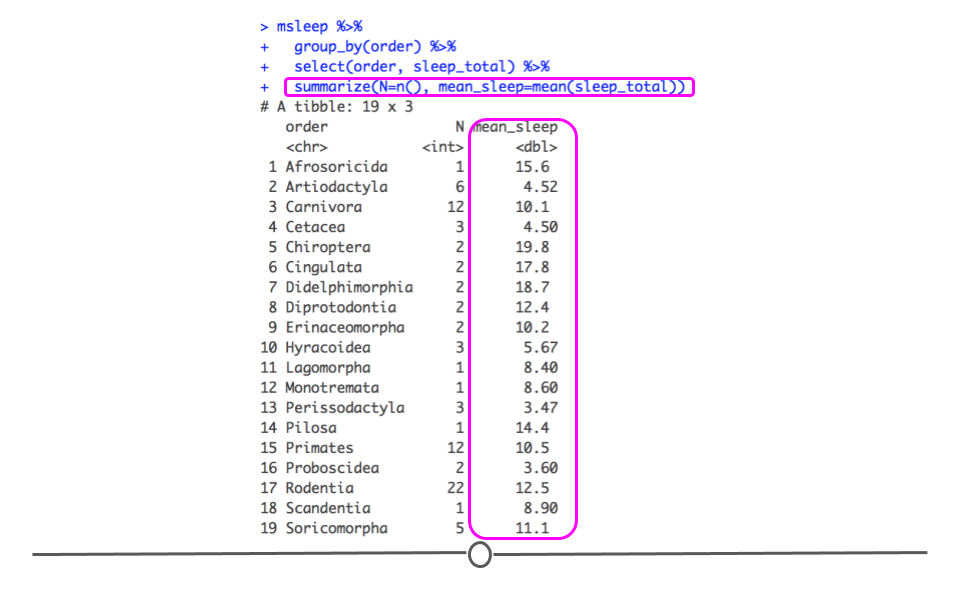

For example, if we wanted to calculate the average (mean) total sleep each order of mammal got, we could use the following syntax:

msleep %>% group_by(order) %>% select(order, sleep_total) %>% summarize(N= n(), mean_sleep= mean(sleep_total)) ## # A tibble: 19 × 3 ## order N mean_sleep ## <chr> <int> <dbl> ## 1 Afrosoricida 1 15.6 ## 2 Artiodactyla 6 4.52 ## 3 Carnivora 12 10.1 ## 4 Cetacea 3 4.5 ## 5 Chiroptera 2 19.8 ## 6 Cingulata 2 17.8 ## 7 Didelphimorphia 2 18.7 ## 8 Diprotodontia 2 12.4 ## 9 Erinaceomorpha 2 10.2 ## 10 Hyracoidea 3 5.67 ## 11 Lagomorpha 1 8.4 ## 12 Monotremata 1 8.6 ## 13 Perissodactyla 3 3.47 ## 14 Pilosa 1 14.4 ## 15 Primates 12 10.5 ## 16 Proboscidea 2 3.6 ## 17 Rodentia 22 12.5 ## 18 Scandentia 1 8.9 ## 19 Soricomorpha 5 11.1

summarize using mean()

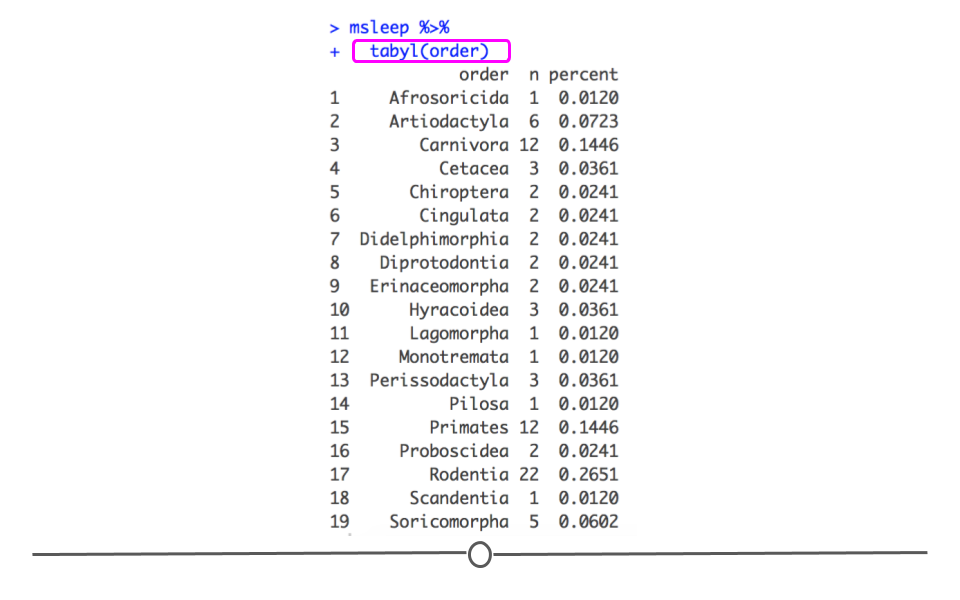

tabyl()

In addition to using summarize() from dplyr, the tabyl() function from the janitor package can be incredibly helpful for summarizing categorical variables quickly and discerning the output at a glance. It is similar to the table() function from base R, but is explicit about missing data, rather than ignoring missing values by default.

Again returning to our msleep dataset, if we wanted to get a summary of how many samples are in each order category and what percent of the data fall into each category we could call tabyl on that variable. For example, if we use the following syntax, we easily get a quick snapshot of this variable.

## order n percent ## Afrosoricida 1 0.01204819 ## Artiodactyla 6 0.07228916 ## Carnivora 12 0.14457831 ## Cetacea 3 0.03614458 ## Chiroptera 2 0.02409639 ## Cingulata 2 0.02409639 ## Didelphimorphia 2 0.02409639 ## Diprotodontia 2 0.02409639 ## Erinaceomorpha 2 0.02409639 ## Hyracoidea 3 0.03614458 ## Lagomorpha 1 0.01204819 ## Monotremata 1 0.01204819 ## Perissodactyla 3 0.03614458 ## Pilosa 1 0.01204819 ## Primates 12 0.14457831 ## Proboscidea 2 0.02409639 ## Rodentia 22 0.26506024 ## Scandentia 1 0.01204819 ## Soricomorpha 5 0.06024096

summarize using tabyl() from janitor

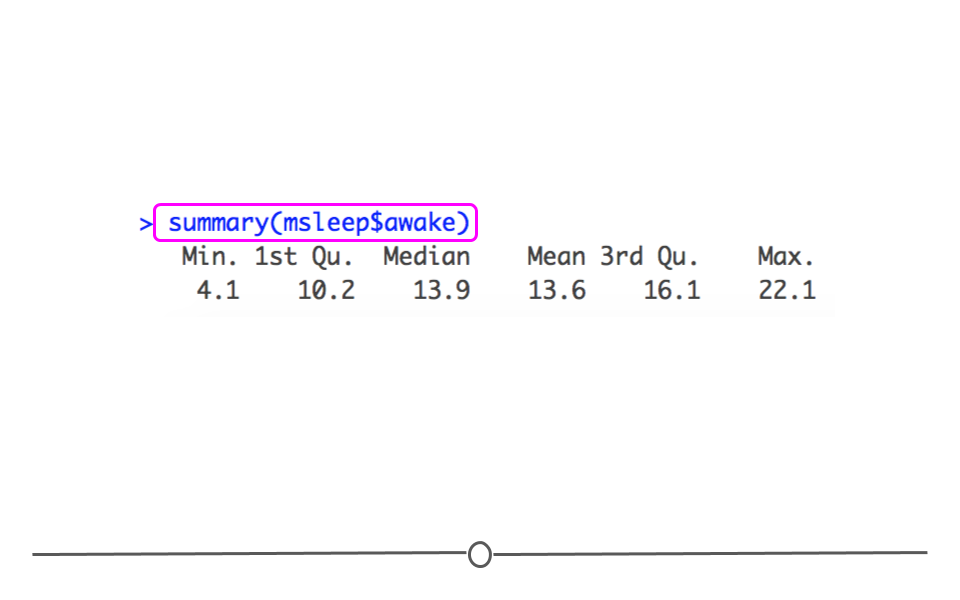

Note, that tabyl assumes categorical variables. If you want to summarize numeric variables summary() works well. For example, this code will summarize the values in msleep$awake for you.

## Min. 1st Qu. Median Mean 3rd Qu. Max. ## 4.10 10.25 13.90 13.57 16.15 22.10

summarize numeric variables

tally()

We can use the tally function to get the total number of samples in a tibble or the total number of rows very simply.

## # A tibble: 1 × 1 ## n ## <int> ## 1 83 We can see that this is very similar to our previous use of summarize.

msleep %>% # here we select the column called genus, any column would work select(genus) %>% summarize(N= n()) ## # A tibble: 1 × 1 ## N ## <int> ## 1 83 We can also use this function to get a sum of the values of a column (if the values are numeric).

msleep %>% tally(sleep_total) ## # A tibble: 1 × 1 ## n ## <dbl> ## 1 866 Thus overall, all the animals in the dataset sleep 866 hours in total.

This is the equivalent to using the sum() function with the summarize() function.

msleep %>% summarize(sum_sleep_total = sum(sleep_total)) ## # A tibble: 1 × 1 ## sum_sleep_total ## <dbl> ## 1 866 We could also use the pull() function of the dplyr package, to get the sum of just the sleep_total column, as the pull() function extracts or "pulls" the values of a column.

msleep %>% pull(sleep_total)%>% sum() ## [1] 866 add_tally()

We can quickly add our tally values to our tibble using add_tally().

msleep %>% add_tally() %>% glimpse() ## Rows: 83 ## Columns: 12 ## $ name <chr> "Cheetah", "Owl monkey", "Mountain beaver", "Greater shor… ## $ genus <chr> "Acinonyx", "Aotus", "Aplodontia", "Blarina", "Bos", "Bra… ## $ vore <chr> "carni", "omni", "herbi", "omni", "herbi", "herbi", "carn… ## $ order <chr> "Carnivora", "Primates", "Rodentia", "Soricomorpha", "Art… ## $ conservation <chr> "lc", NA, "nt", "lc", "domesticated", NA, "vu", NA, "dome… ## $ sleep_total <dbl> 12.1, 17.0, 14.4, 14.9, 4.0, 14.4, 8.7, 7.0, 10.1, 3.0, 5… ## $ sleep_rem <dbl> NA, 1.8, 2.4, 2.3, 0.7, 2.2, 1.4, NA, 2.9, NA, 0.6, 0.8, … ## $ sleep_cycle <dbl> NA, NA, NA, 0.1333333, 0.6666667, 0.7666667, 0.3833333, N… ## $ awake <dbl> 11.9, 7.0, 9.6, 9.1, 20.0, 9.6, 15.3, 17.0, 13.9, 21.0, 1… ## $ brainwt <dbl> NA, 0.01550, NA, 0.00029, 0.42300, NA, NA, NA, 0.07000, 0… ## $ bodywt <dbl> 50.000, 0.480, 1.350, 0.019, 600.000, 3.850, 20.490, 0.04… ## $ n <int> 83, 83, 83, 83, 83, 83, 83, 83, 83, 83, 83, 83, 83, 83, 8… Notice the new column called "n" that repeats the total number of samples for each row.

Or we can add a column that repeats the total hours of sleep of all the animals.

msleep %>% add_tally(sleep_total) %>% glimpse() ## Rows: 83 ## Columns: 12 ## $ name <chr> "Cheetah", "Owl monkey", "Mountain beaver", "Greater shor… ## $ genus <chr> "Acinonyx", "Aotus", "Aplodontia", "Blarina", "Bos", "Bra… ## $ vore <chr> "carni", "omni", "herbi", "omni", "herbi", "herbi", "carn… ## $ order <chr> "Carnivora", "Primates", "Rodentia", "Soricomorpha", "Art… ## $ conservation <chr> "lc", NA, "nt", "lc", "domesticated", NA, "vu", NA, "dome… ## $ sleep_total <dbl> 12.1, 17.0, 14.4, 14.9, 4.0, 14.4, 8.7, 7.0, 10.1, 3.0, 5… ## $ sleep_rem <dbl> NA, 1.8, 2.4, 2.3, 0.7, 2.2, 1.4, NA, 2.9, NA, 0.6, 0.8, … ## $ sleep_cycle <dbl> NA, NA, NA, 0.1333333, 0.6666667, 0.7666667, 0.3833333, N… ## $ awake <dbl> 11.9, 7.0, 9.6, 9.1, 20.0, 9.6, 15.3, 17.0, 13.9, 21.0, 1… ## $ brainwt <dbl> NA, 0.01550, NA, 0.00029, 0.42300, NA, NA, NA, 0.07000, 0… ## $ bodywt <dbl> 50.000, 0.480, 1.350, 0.019, 600.000, 3.850, 20.490, 0.04… ## $ n <dbl> 866, 866, 866, 866, 866, 866, 866, 866, 866, 866, 866, 86… count()

The count() function takes the tally() function a step further to determine the count of unique values for specified variable(s)/column(s).

## # A tibble: 5 × 2 ## vore n ## <chr> <int> ## 1 carni 19 ## 2 herbi 32 ## 3 insecti 5 ## 4 omni 20 ## 5 <NA> 7 This is the same as using group_by() with tally()

msleep %>% group_by(vore) %>% tally() ## # A tibble: 5 × 2 ## vore n ## <chr> <int> ## 1 carni 19 ## 2 herbi 32 ## 3 insecti 5 ## 4 omni 20 ## 5 <NA> 7 Multiple variables can be specified with count().

This can be really useful when getting to know your data.

msleep %>% count(vore, order) ## # A tibble: 32 × 3 ## vore order n ## <chr> <chr> <int> ## 1 carni Carnivora 12 ## 2 carni Cetacea 3 ## 3 carni Cingulata 1 ## 4 carni Didelphimorphia 1 ## 5 carni Primates 1 ## 6 carni Rodentia 1 ## 7 herbi Artiodactyla 5 ## 8 herbi Diprotodontia 1 ## 9 herbi Hyracoidea 2 ## 10 herbi Lagomorpha 1 ## # … with 22 more rows add_count()

The add_count() function is similar to the add_tally() function:

msleep %>% add_count(vore, order) %>% glimpse() ## Rows: 83 ## Columns: 12 ## $ name <chr> "Cheetah", "Owl monkey", "Mountain beaver", "Greater shor… ## $ genus <chr> "Acinonyx", "Aotus", "Aplodontia", "Blarina", "Bos", "Bra… ## $ vore <chr> "carni", "omni", "herbi", "omni", "herbi", "herbi", "carn… ## $ order <chr> "Carnivora", "Primates", "Rodentia", "Soricomorpha", "Art… ## $ conservation <chr> "lc", NA, "nt", "lc", "domesticated", NA, "vu", NA, "dome… ## $ sleep_total <dbl> 12.1, 17.0, 14.4, 14.9, 4.0, 14.4, 8.7, 7.0, 10.1, 3.0, 5… ## $ sleep_rem <dbl> NA, 1.8, 2.4, 2.3, 0.7, 2.2, 1.4, NA, 2.9, NA, 0.6, 0.8, … ## $ sleep_cycle <dbl> NA, NA, NA, 0.1333333, 0.6666667, 0.7666667, 0.3833333, N… ## $ awake <dbl> 11.9, 7.0, 9.6, 9.1, 20.0, 9.6, 15.3, 17.0, 13.9, 21.0, 1… ## $ brainwt <dbl> NA, 0.01550, NA, 0.00029, 0.42300, NA, NA, NA, 0.07000, 0… ## $ bodywt <dbl> 50.000, 0.480, 1.350, 0.019, 600.000, 3.850, 20.490, 0.04… ## $ n <int> 12, 10, 16, 3, 5, 1, 12, 3, 12, 5, 5, 16, 10, 16, 3, 2, 3… get_dupes()

Another common issue in data wrangling is the presence of duplicate entries. Sometimes you expect multiple observations from the same individual in your dataset. Other times, the information has accidentally been added more than once. The get_dupes() function becomes very helpful in this situation. If you want to identify duplicate entries during data wrangling, you'll use this function and specify which columns you're looking for duplicates in.

For example, in the msleep dataset, if you expected to only have one mammal representing each genus and vore you could double check this using get_dupes().

# identify observations that match in both genus and vore msleep %>% get_dupes(genus, vore) ## # A tibble: 10 × 12 ## genus vore dupe_count name order conservation sleep_total sleep_rem ## <chr> <chr> <int> <chr> <chr> <chr> <dbl> <dbl> ## 1 Equus herbi 2 Horse Peri… domesticated 2.9 0.6 ## 2 Equus herbi 2 Donkey Peri… domesticated 3.1 0.4 ## 3 Panthera carni 3 Tiger Carn… en 15.8 NA ## 4 Panthera carni 3 Jaguar Carn… nt 10.4 NA ## 5 Panthera carni 3 Lion Carn… vu 13.5 NA ## 6 Spermophilus herbi 3 Arcti… Rode… lc 16.6 NA ## 7 Spermophilus herbi 3 Thirt… Rode… lc 13.8 3.4 ## 8 Spermophilus herbi 3 Golde… Rode… lc 15.9 3 ## 9 Vulpes carni 2 Arcti… Carn… <NA> 12.5 NA ## 10 Vulpes carni 2 Red f… Carn… <NA> 9.8 2.4 ## # … with 4 more variables: sleep_cycle <dbl>, awake <dbl>, brainwt <dbl>, ## # bodywt <dbl> The output demonstrates there are 10 mammals that overlap in their genus and vore. Note that the third column of the output counts how many duplicate observations there are. This can be very helpful when you're checking your data!

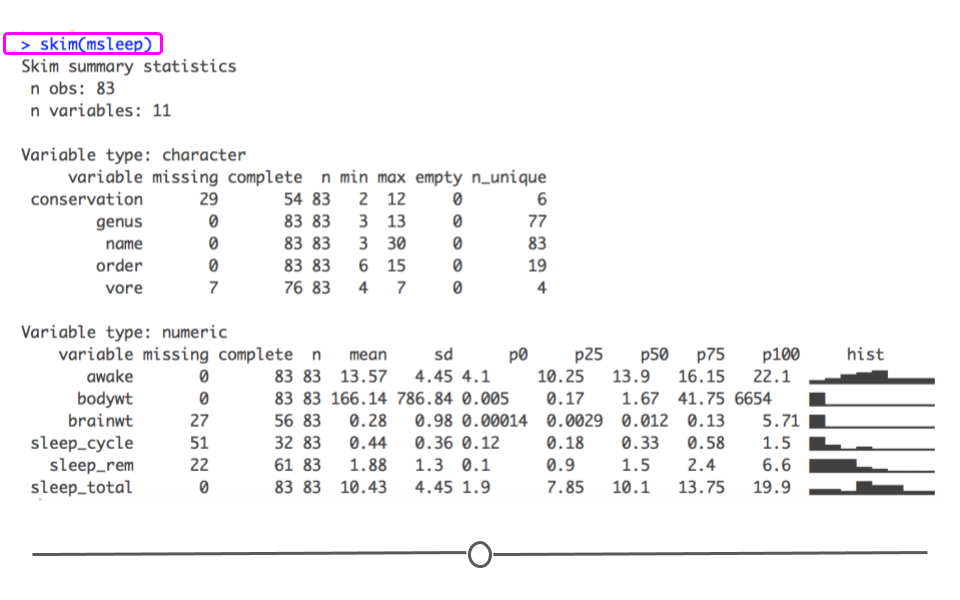

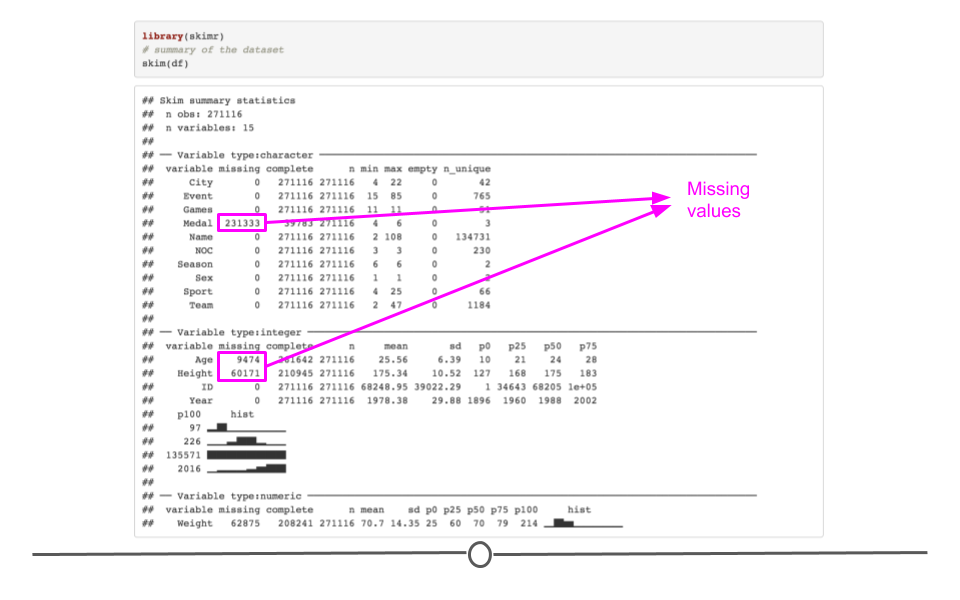

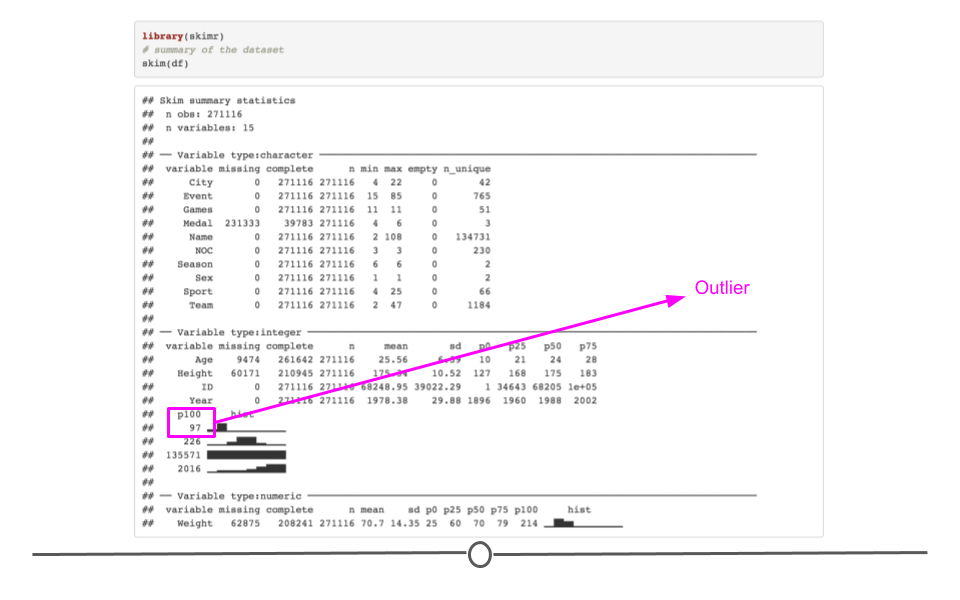

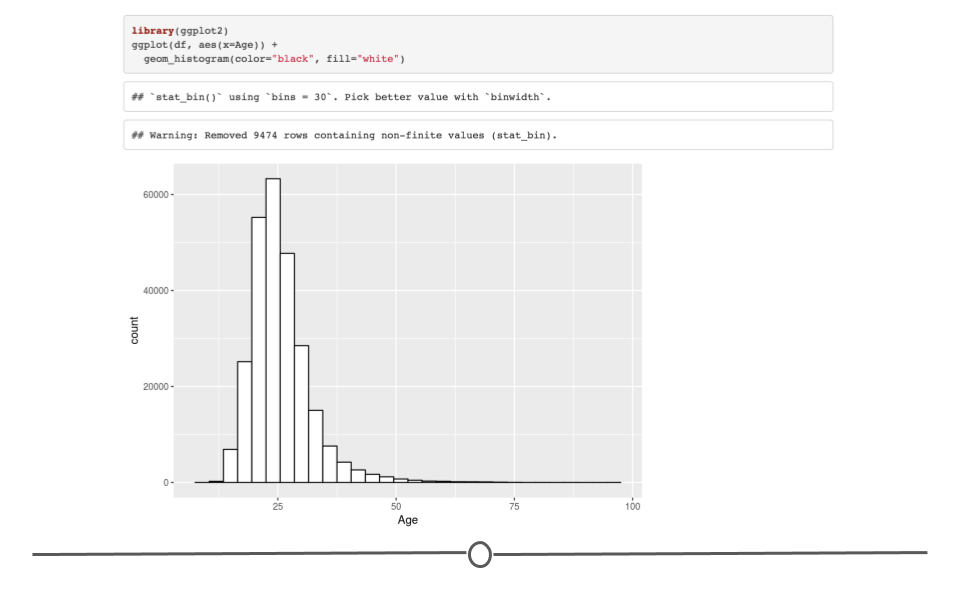

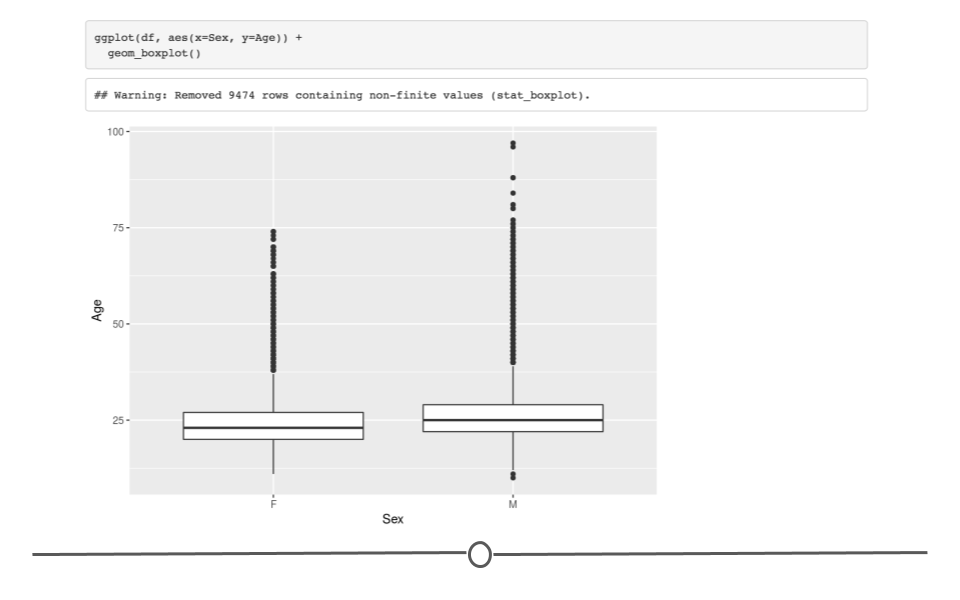

skim()

When you would rather get a snapshot of the entire dataset, rather than just one variable, the skim() function from the skimr package can be very helpful. The output from skim() breaks the data up by variable type. For example, the msleep dataset is broken up into character and numeric variable types. The data are then summarized in a meaningful way for each. This function provides a lot of information about the entire dataset. So, when you want a summarize a dataset and quickly get a sense of your data, skim() is a great option!

# summarize dataset skim(msleep) | Name | msleep |

| Number of rows | 83 |

| Number of columns | 11 |

| _______________________ | |

| Column type frequency: | |

| character | 5 |

| numeric | 6 |

| ________________________ | |

| Group variables | None |

Variable type: character

| skim_variable | n_missing | complete_rate | min | max | empty | n_unique | whitespace |

|---|---|---|---|---|---|---|---|

| name | 0 | 1.00 | 3 | 30 | 0 | 83 | 0 |

| genus | 0 | 1.00 | 3 | 13 | 0 | 77 | 0 |

| vore | 7 | 0.92 | 4 | 7 | 0 | 4 | 0 |

| order | 0 | 1.00 | 6 | 15 | 0 | 19 | 0 |

| conservation | 29 | 0.65 | 2 | 12 | 0 | 6 | 0 |

Variable type: numeric

| skim_variable | n_missing | complete_rate | mean | sd | p0 | p25 | p50 | p75 | p100 | hist |

|---|---|---|---|---|---|---|---|---|---|---|

| sleep_total | 0 | 1.00 | 10.43 | 4.45 | 1.90 | 7.85 | 10.10 | 13.75 | 19.90 | ▅▅▇▆▂ |

| sleep_rem | 22 | 0.73 | 1.88 | 1.30 | 0.10 | 0.90 | 1.50 | 2.40 | 6.60 | ▇▆▂▁▁ |

| sleep_cycle | 51 | 0.39 | 0.44 | 0.36 | 0.12 | 0.18 | 0.33 | 0.58 | 1.50 | ▇▂▁▁▁ |

| awake | 0 | 1.00 | 13.57 | 4.45 | 4.10 | 10.25 | 13.90 | 16.15 | 22.10 | ▂▅▇▃▅ |

| brainwt | 27 | 0.67 | 0.28 | 0.98 | 0.00 | 0.00 | 0.01 | 0.13 | 5.71 | ▇▁▁▁▁ |

| bodywt | 0 | 1.00 | 166.14 | 786.84 | 0.00 | 0.17 | 1.67 | 41.75 | 6654.00 | ▇▁▁▁▁ |

summarize entire dataset using skim() from skimr

Note that this function allows for you to specify which columns you'd like to summarize, if you're not interested in seeing a summary of the entire dataset:

# see summary for specified columns skim(msleep, genus, vore, sleep_total) | Name | msleep |

| Number of rows | 83 |

| Number of columns | 11 |

| _______________________ | |

| Column type frequency: | |

| character | 2 |

| numeric | 1 |

| ________________________ | |

| Group variables | None |

Variable type: character

| skim_variable | n_missing | complete_rate | min | max | empty | n_unique | whitespace |

|---|---|---|---|---|---|---|---|

| genus | 0 | 1.00 | 3 | 13 | 0 | 77 | 0 |

| vore | 7 | 0.92 | 4 | 7 | 0 | 4 | 0 |

Variable type: numeric

| skim_variable | n_missing | complete_rate | mean | sd | p0 | p25 | p50 | p75 | p100 | hist |

|---|---|---|---|---|---|---|---|---|---|---|

| sleep_total | 0 | 1 | 10.43 | 4.45 | 1.9 | 7.85 | 10.1 | 13.75 | 19.9 | ▅▅▇▆▂ |

It is also possible to group data (using dplyr's group_by()) before summarizing. Notice in the summary output that each variable specified (genus and sleep_total) are now broken down within each of the vore categories.

msleep %>% group_by(vore) %>% skim(genus, sleep_total) | Name | Piped data |

| Number of rows | 83 |

| Number of columns | 11 |

| _______________________ | |

| Column type frequency: | |

| character | 1 |

| numeric | 1 |

| ________________________ | |

| Group variables | vore |

Variable type: character

| skim_variable | vore | n_missing | complete_rate | min | max | empty | n_unique | whitespace |

|---|---|---|---|---|---|---|---|---|

| genus | carni | 0 | 1 | 5 | 13 | 0 | 16 | 0 |

| genus | herbi | 0 | 1 | 3 | 12 | 0 | 29 | 0 |

| genus | insecti | 0 | 1 | 6 | 12 | 0 | 5 | 0 |

| genus | omni | 0 | 1 | 3 | 13 | 0 | 20 | 0 |

| genus | NA | 0 | 1 | 6 | 11 | 0 | 7 | 0 |

Variable type: numeric

| skim_variable | vore | n_missing | complete_rate | mean | sd | p0 | p25 | p50 | p75 | p100 | hist |

|---|---|---|---|---|---|---|---|---|---|---|---|

| sleep_total | carni | 0 | 1 | 10.38 | 4.67 | 2.7 | 6.25 | 10.4 | 13.00 | 19.4 | ▅▃▇▃▂ |

| sleep_total | herbi | 0 | 1 | 9.51 | 4.88 | 1.9 | 4.30 | 10.3 | 14.22 | 16.6 | ▇▃▂▅▇ |

| sleep_total | insecti | 0 | 1 | 14.94 | 5.92 | 8.4 | 8.60 | 18.1 | 19.70 | 19.9 | ▅▁▁▁▇ |

| sleep_total | omni | 0 | 1 | 10.93 | 2.95 | 8.0 | 9.10 | 9.9 | 10.93 | 18.0 | ▇▃▁▂▂ |

| sleep_total | NA | 0 | 1 | 10.19 | 3.00 | 5.4 | 8.65 | 10.6 | 12.15 | 13.7 | ▇▁▃▇▇ |

summary()

While base R has a summary() function, this can be combined with the skimr package to provide you with a quick summary of the dataset at large.

skim(msleep) %>% summary() | Name | msleep |

| Number of rows | 83 |

| Number of columns | 11 |

| _______________________ | |

| Column type frequency: | |

| character | 5 |

| numeric | 6 |

| ________________________ | |

| Group variables | None |

Operations Across Columns

Sometimes it is valuable to apply a certain operation across the columns of a data frame. For example, it be necessary to compute the mean or some other summary statistics for each column in the data frame. In some cases, these operations can be done by a combination of pivot_longer() along with group_by() and summarize(). However, in other cases it is more straightforward to simply compute the statistic on each column.

The across() function is needed to operate across the columns of a data frame. For example, in our airquality dataset, if we wanted to compute the mean of Ozone, Solar.R, Wind, and Temp, we could do:

airquality %>% summarize(across(Ozone:Temp, mean, na.rm = TRUE)) ## # A tibble: 1 × 4 ## Ozone Solar.R Wind Temp ## <dbl> <dbl> <dbl> <dbl> ## 1 42.1 186. 9.96 77.9 The across() function can be used in conjunction with the mutate() and filter() functions to construct joint operations across different columns of a data frame. For example, suppose we wanted to filter the rows of the airquality data frame so that we only retain rows that do not have missing values for Ozone and Solar.R. Generally, we might use the filter() function for this, as follows:

airquality %>% filter(! is.na(Ozone), ! is.na(Solar.R)) Because we are only filtering on two columns here, it's not too difficult to write out the expression. However, if we were filtering on many columns, it would become a challenge to write out every column. This is where the across() function comes in handy. With the across() function, we can specify columns in the same way that we use the select() function. This allows us to use short-hand notation to select a large set of columns.

We can use the across() function in conjunction with filter() to achieve the same result as above.

airquality %>% filter(across(Ozone:Solar.R, ~ ! is.na(.))) ## # A tibble: 111 × 6 ## Ozone Solar.R Wind Temp Month Day ## <int> <int> <dbl> <int> <int> <int> ## 1 41 190 7.4 67 5 1 ## 2 36 118 8 72 5 2 ## 3 12 149 12.6 74 5 3 ## 4 18 313 11.5 62 5 4 ## 5 23 299 8.6 65 5 7 ## 6 19 99 13.8 59 5 8 ## 7 8 19 20.1 61 5 9 ## 8 16 256 9.7 69 5 12 ## 9 11 290 9.2 66 5 13 ## 10 14 274 10.9 68 5 14 ## # … with 101 more rows Here, the ~ in the call to across() indicates that we are passing an anonymous function (see the section on Functional Programming for more details) and the . is a stand-in for the name of the column.

If we wanted to filter the data frame to remove rows with missing values in Ozone, Solar.R, Wind, and Temp, we only need to make a small change.

airquality %>% filter(across(Ozone:Temp, ~ ! is.na(.))) ## # A tibble: 111 × 6 ## Ozone Solar.R Wind Temp Month Day ## <int> <int> <dbl> <int> <int> <int> ## 1 41 190 7.4 67 5 1 ## 2 36 118 8 72 5 2 ## 3 12 149 12.6 74 5 3 ## 4 18 313 11.5 62 5 4 ## 5 23 299 8.6 65 5 7 ## 6 19 99 13.8 59 5 8 ## 7 8 19 20.1 61 5 9 ## 8 16 256 9.7 69 5 12 ## 9 11 290 9.2 66 5 13 ## 10 14 274 10.9 68 5 14 ## # … with 101 more rows The across() function can also be used with mutate() if we want to apply the same transformation to multiple columns. For example, suppose we want to cycle through each column and replace all missing values (NAs) with zeros. We could use across() to accomplish this.

airquality %>% mutate(across(Ozone:Temp, ~ replace_na(., 0))) ## # A tibble: 153 × 6 ## Ozone Solar.R Wind Temp Month Day ## <dbl> <dbl> <dbl> <dbl> <int> <int> ## 1 41 190 7.4 67 5 1 ## 2 36 118 8 72 5 2 ## 3 12 149 12.6 74 5 3 ## 4 18 313 11.5 62 5 4 ## 5 0 0 14.3 56 5 5 ## 6 28 0 14.9 66 5 6 ## 7 23 299 8.6 65 5 7 ## 8 19 99 13.8 59 5 8 ## 9 8 19 20.1 61 5 9 ## 10 0 194 8.6 69 5 10 ## # … with 143 more rows Again, the . is used as a stand-in for the name of the column. This expression essentially applies the replace_na() function to each of the columns between Ozone and Temp in the data frame.

Working With Factors

In R, categorical data are handled as factors. By definition, categorical data are limited in that they have a set number of possible values they can take. For example, there are 12 months in a calendar year. In a month variable, each observation is limited to taking one of these twelve values. Thus, with a limited number of possible values, month is a categorical variable. Categorical data, which will be referred to as factors for the rest of this lesson, are regularly found in data. Learning how to work with this type of variable effectively will be incredibly helpful.

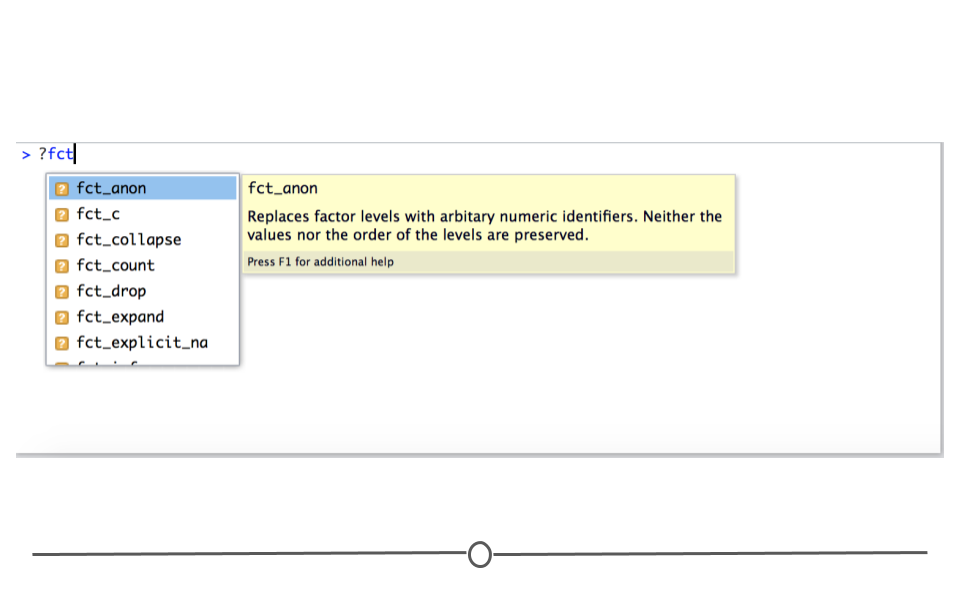

To make working with factors simpler, we'll utilize the forcats package, a core tidyverse package. All functions within forcats begin with fct_, making them easier to look up and remember. As before, to see available functions you can type ?fct_ in your RStudio console. A drop-down menu will appear with all the possible forcats functions.

fct_ output from RStudio

Factor Review

In R, factors are comprised of two components: the actual values of the data and the possible levels within the factor. Thus, to create a factor, you need to supply both these pieces of information.

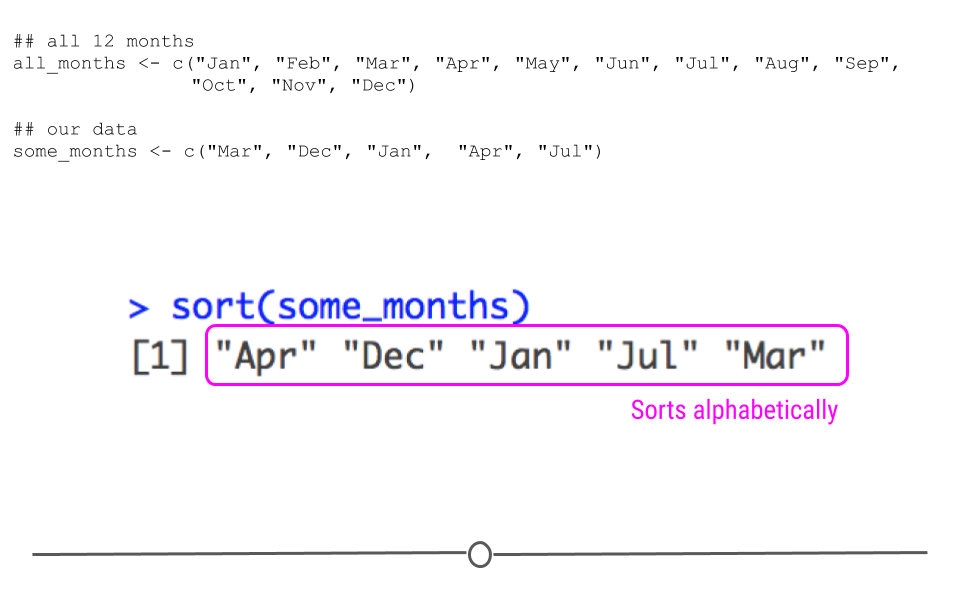

For example, if we were to create a character vector of the twelve months, we could certainly do that:

## all 12 months all_months <- c("Jan", "Feb", "Mar", "Apr", "May", "Jun", "Jul", "Aug", "Sep", "Oct", "Nov", "Dec") ## our data some_months <- c("Mar", "Dec", "Jan", "Apr", "Jul") However, if we were to sort this vector, R would sort this vector alphabetically.

# alphabetical sort sort(some_months) ## [1] "Apr" "Dec" "Jan" "Jul" "Mar"

sort sorts variable alphabetically

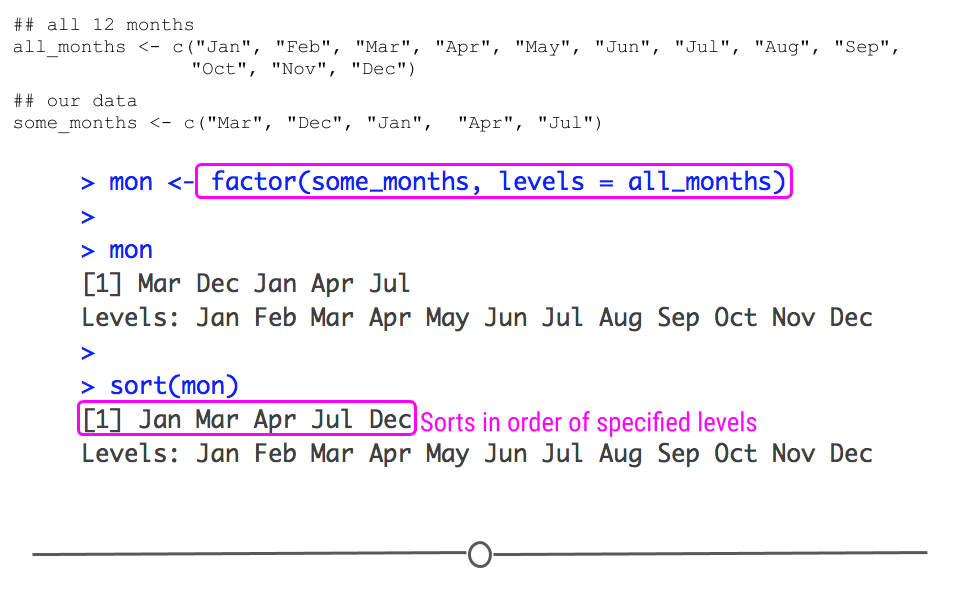

While you and I know that this is not how months should be ordered, we haven't yet told R that. To do so, we need to let R know that it's a factor variable and what the levels of that factor variable should be.

# create factor mon <- factor(some_months, levels = all_months) # look at factor mon ## [1] Mar Dec Jan Apr Jul ## Levels: Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec # look at sorted factor sort(mon) ## [1] Jan Mar Apr Jul Dec ## Levels: Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec

defining the factor levels sorts this variable sensibly

Here, we specify all the possible values that the factor could take in the levels = all_months argument. So, even though not all twelve months are included in the some_months object, we've stated that all of the months are possible values. Further, when you sort this variable, it now sorts in the sensical way!

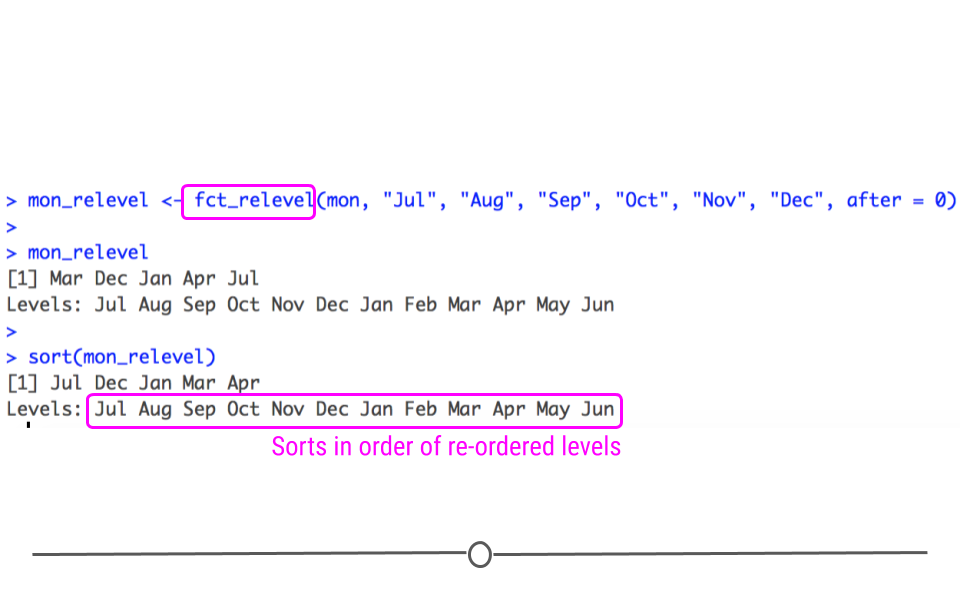

Manually Changing the Labels of Factor Levels: fct_relevel()

What if you wanted your months to start with July first? That can be accomplished using fct_relevel(). To use this function, you simply need to state what you'd like to relevel (mon) followed by the levels you want to relevel. If you want these to be placed in the beginning, the after argument should be after = 0. You can play around with this setting to see how changing after affects the levels in your output.

mon_relevel <- fct_relevel(mon, "Jul", "Aug", "Sep", "Oct", "Nov", "Dec", after = 0) # releveled mon_relevel ## [1] Mar Dec Jan Apr Jul ## Levels: Jul Aug Sep Oct Nov Dec Jan Feb Mar Apr May Jun # releleveld and sorted sort(mon_relevel) ## [1] Jul Dec Jan Mar Apr ## Levels: Jul Aug Sep Oct Nov Dec Jan Feb Mar Apr May Jun

fct_relevel enables you to change the order of your factor levels

After re-leveling, when we sort this factor, we see that Jul is placed first, as specified by the level re-ordering.

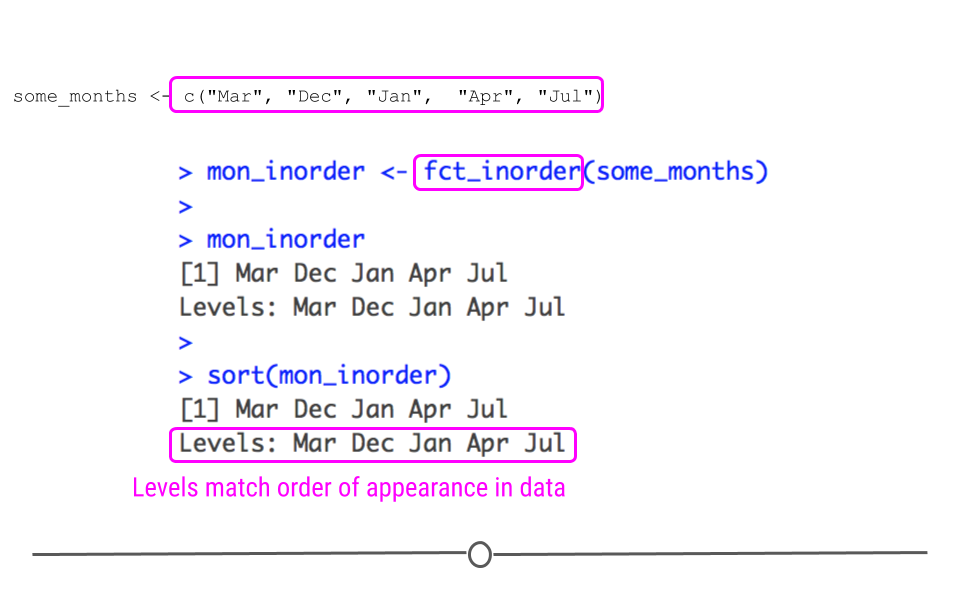

Keeping the Order of the Factor Levels: fct_inorder()

Now, if you're not interested in the months being in calendar year order, you can always state that you want the levels to stay in the same order as the data you started with, you simply specify with fct_inorder().

# keep order of appearance mon_inorder <- fct_inorder(some_months) # output mon_inorder ## [1] Mar Dec Jan Apr Jul ## Levels: Mar Dec Jan Apr Jul # sorted sort(mon_inorder) ## [1] Mar Dec Jan Apr Jul ## Levels: Mar Dec Jan Apr Jul

fct_inorder() assigns levels in the same order the level is seen in the data

We see now with fct_inorder() that even when we sort the output, it does not sort the factor alphabetically, nor does it put it in calendar order. In fact, it stays in the same order as the input, just as we specified.

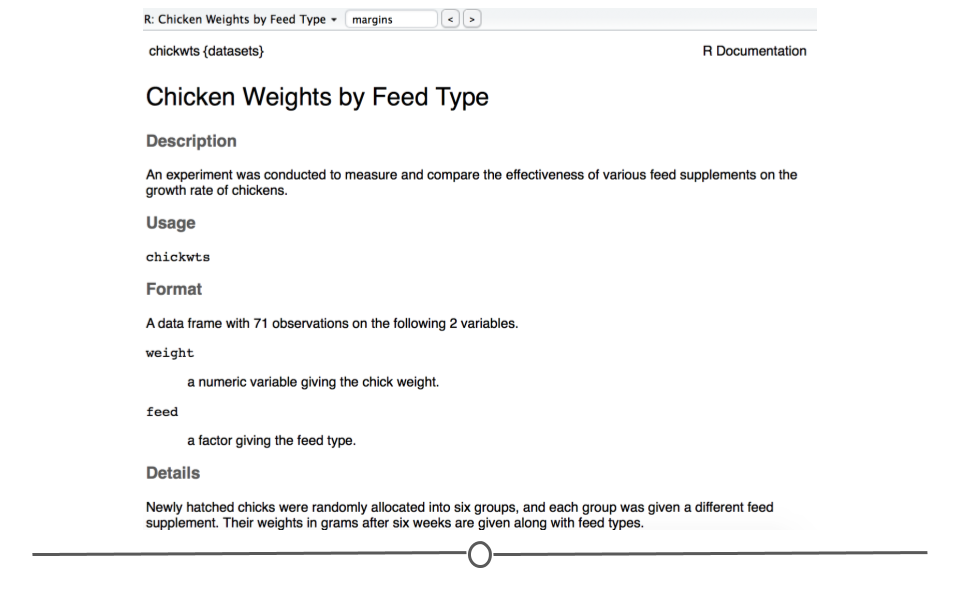

Advanced Factoring

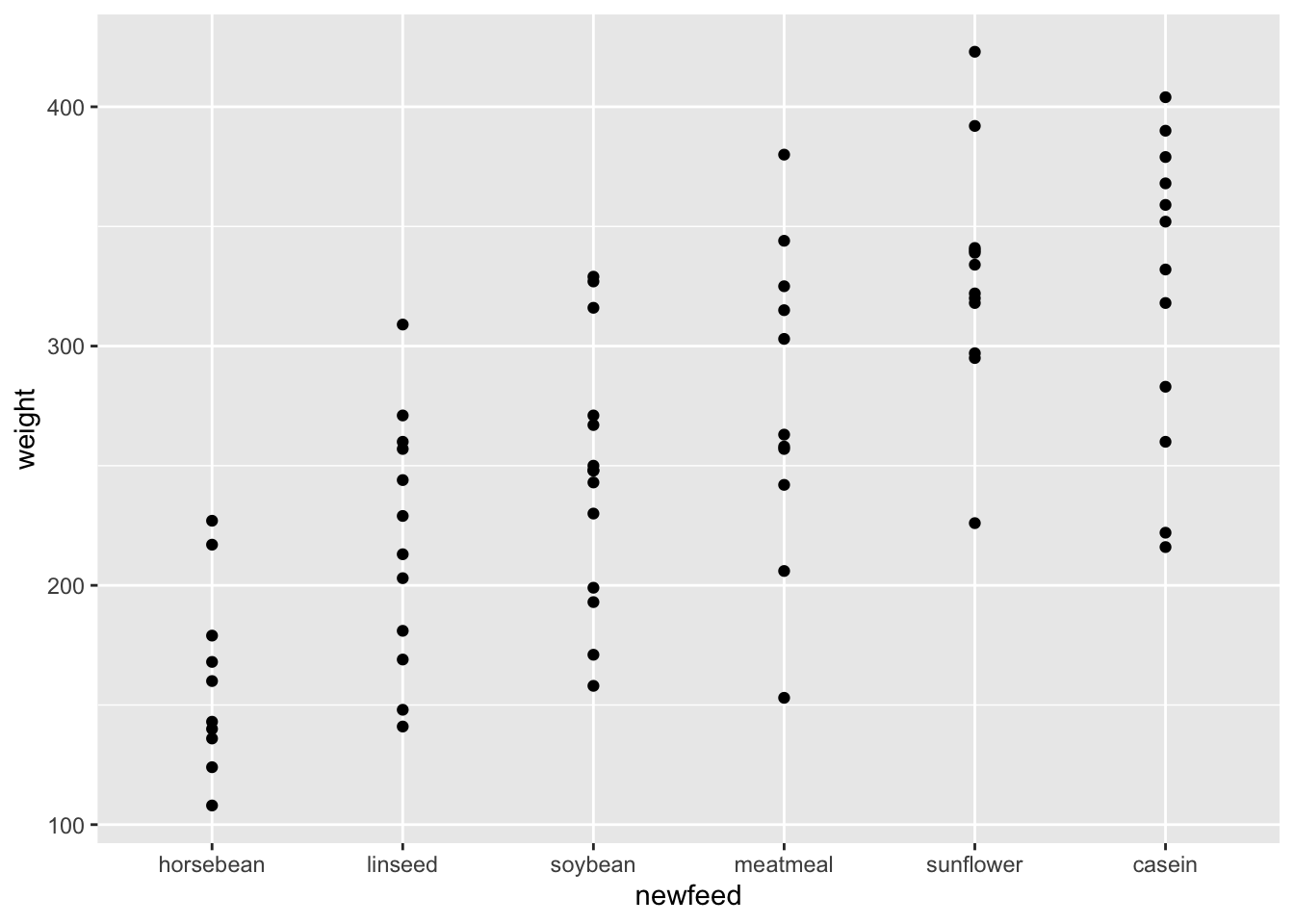

For the remainder of this lesson, we're going to return to using a dataset that's in R by default. We'll use the chickwts dataset for exploring the remaining advanced functions. This dataset includes data from an experiment that was looking to compare the "effectiveness of various feed supplements on the growth rate of chickens."

chickwts dataset

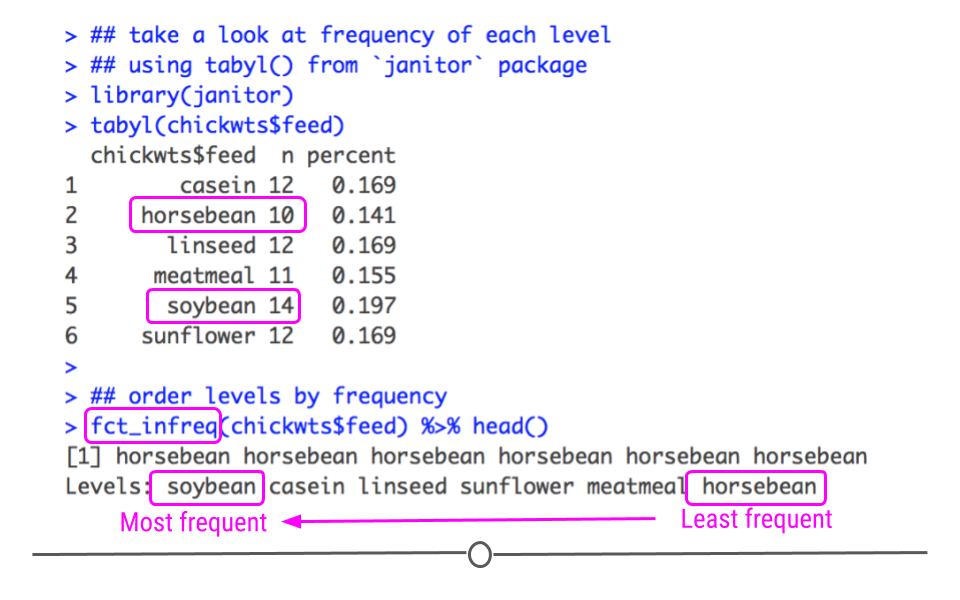

Re-ordering Factor Levels by Frequency: fct_infreq()

To re-order factor levels by frequency of the value in the dataset, you'll want to use fct_infreq(). Below, we see from the output from tabyl() that 'soybean' is the most frequent feed in the dataset while 'horsebean' is the least frequent. Thus, when we order by frequency, we can expect these two feeds to be at opposite ends for our levels.

## take a look at frequency of each level ## using tabyl() from `janitor` package tabyl(chickwts$feed) ## chickwts$feed n percent ## casein 12 0.1690141 ## horsebean 10 0.1408451 ## linseed 12 0.1690141 ## meatmeal 11 0.1549296 ## soybean 14 0.1971831 ## sunflower 12 0.1690141 ## order levels by frequency fct_infreq(chickwts$feed) %>% head() ## [1] horsebean horsebean horsebean horsebean horsebean horsebean ## Levels: soybean casein linseed sunflower meatmeal horsebean

fct_infreq orders levels based on frequency in dataset

As expected, soybean, the most frequent level, appears as the first level and horsebean, the least frequent level, appears last. The rest of the levels are sorted by frequency.

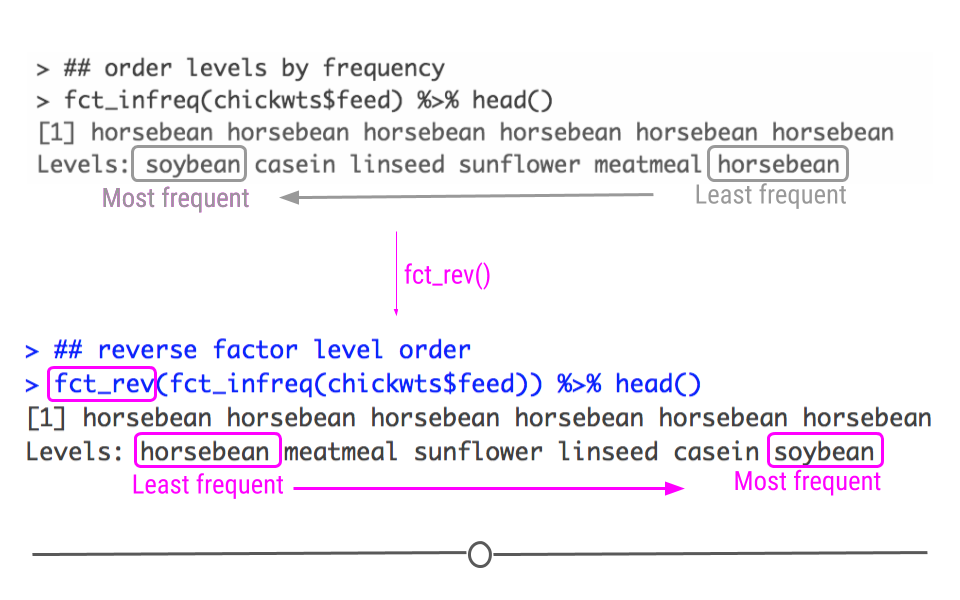

Reversing Order Levels: fct_rev()

If we wanted to sort the levels from least frequent to most frequent, we could just put fct_rev() around the code we just used to reverse the factor level order.

## reverse factor level order fct_rev(fct_infreq(chickwts$feed)) %>% head() ## [1] horsebean horsebean horsebean horsebean horsebean horsebean ## Levels: horsebean meatmeal sunflower linseed casein soybean

fct_rev() reverses the factor level order

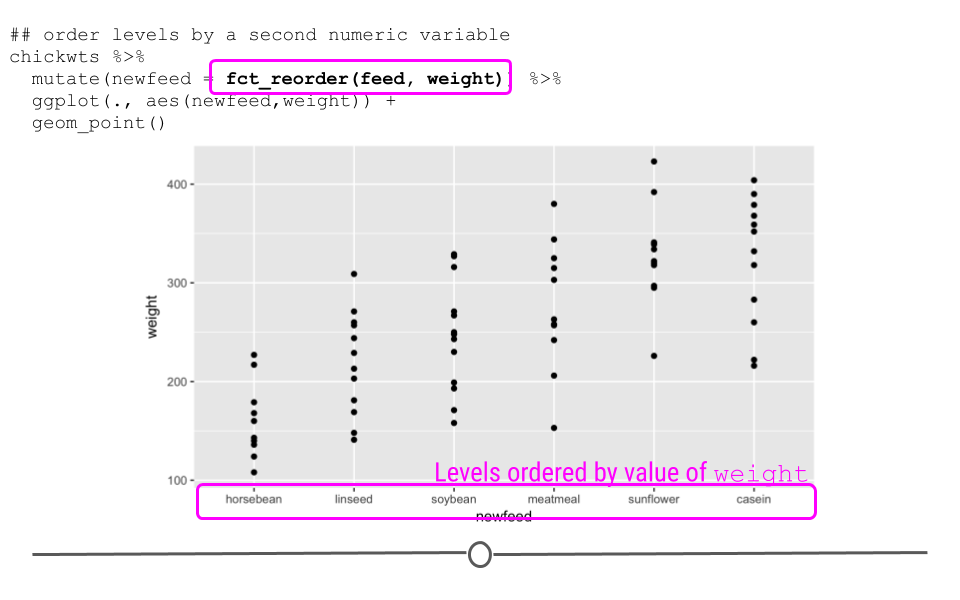

Re-ordering Factor Levels by Another Variable: fct_reorder()

At times you may want to reorder levels of a factor by another variable in your dataset. This is often helpful when generating plots (which we'll get to in a future lesson!). To do this you specify the variable you want to reorder, followed by the numeric variable by which you'd like the factor to be re-leveled. Here, we see that we're re-leveling feed by the weight of the chickens. While we haven't discussed plotting yet, the best way to demonstrate how this works is by plotting the feed against the weights. We can see that the order of the factor is such that those chickens with the lowest median weight (horsebean) are to the left, while those with the highest median weight (casein) are to the right.

## order levels by a second numeric variable chickwts %>% mutate(newfeed = fct_reorder(feed, weight)) %>% ggplot(., aes(newfeed,weight)) + geom_point()

fct_reorder allows you to re-level a factor based on a secondary numeric variable

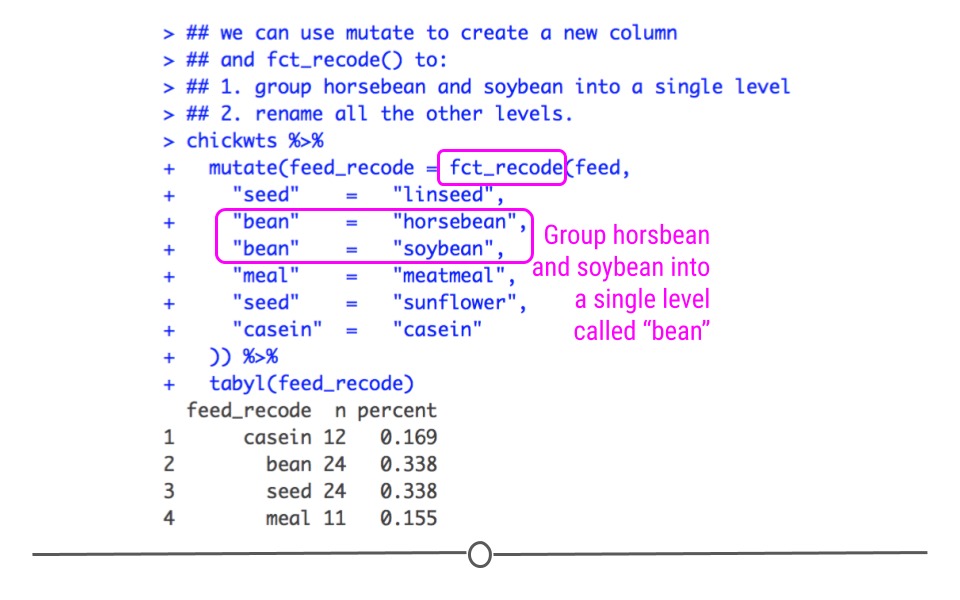

Combining Several Levels into One: fct_recode()

To demonstrate how to combine several factor levels into a single level, we'll continue to use our 'chickwts' dataset. Now, I don't know much about chicken feed, and there's a good chance you know a lot more. However, let's assume (even if it doesn't make good sense with regards to chicken feed) you wanted to combine all the feeds with the name "bean" in it to a single category and you wanted to combine "linseed" and "sunflower"" into the category "seed." This can be simply accomplished with fct_recode. In fact, below, you see we can rename all the levels to a simpler term (the values on the left side of the equals sign) by re-naming the original level names (the right side of the equals sign). This code will create a new column, called feed_recode (accomplished with mutate()). This new column will combine "horsebean" and "soybean feeds," grouping them both into the larger level "bean." It will similarly group "sunflower" and "linseed" into the larger level "seed." All other feed types will also be renamed. When we look at the summary of this new column by using tabyl(), we see that all of the feeds have been recoded, just as we specified! We now have four different feed types, rather than the original six.

## we can use mutate to create a new column ## and fct_recode() to: ## 1. group horsebean and soybean into a single level ## 2. rename all the other levels. chickwts %>% mutate(feed_recode = fct_recode(feed, "seed" = "linseed", "bean" = "horsebean", "bean" = "soybean", "meal" = "meatmeal", "seed" = "sunflower", "casein" = "casein" )) %>% tabyl(feed_recode) ## feed_recode n percent ## casein 12 0.1690141 ## bean 24 0.3380282 ## seed 24 0.3380282 ## meal 11 0.1549296

fct_recode() can be used to group multiple levels into a single level and/or to rename levels

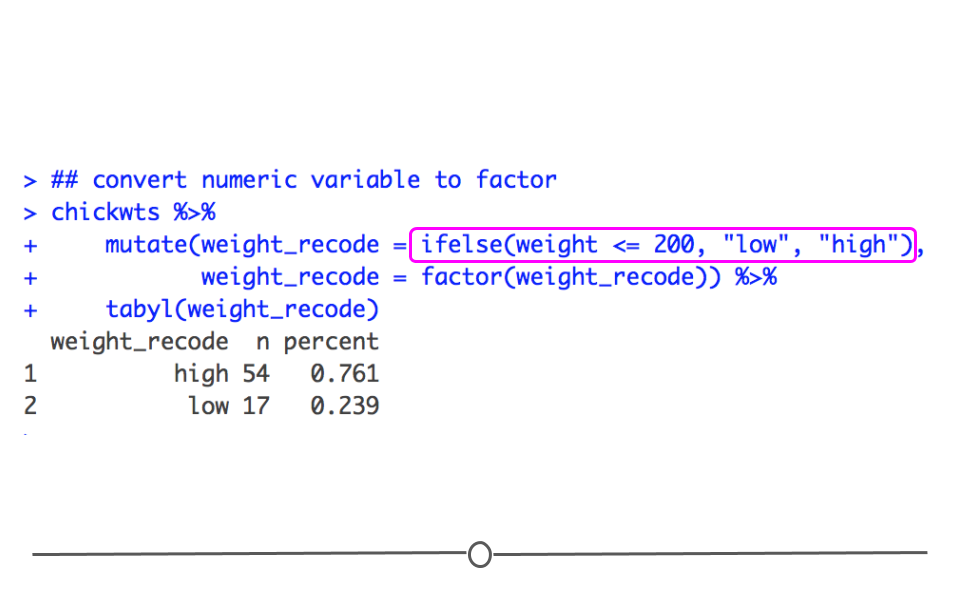

Converting Numeric Levels to Factors: ifelse() + factor()

Finally, when working with factors, there are times when you want to convert a numeric variable into a factor. For example, if you were talking about a dataset with BMI for a number of individuals, you may want to categorize people based on whether or not they are underweight (BMI < 18.5), of a healthy weight (BMI between 18.5 and 29.9), or obese (BMI >= 30). When you want to take a numeric variable and turn it into a categorical factor variable, you can accomplish this easily by using ifelse() statements. Within a single statement we provide R with a condition: weight <= 200. With this, we are stating that the condition is if a chicken's weight is less than or equal to 200 grams. Then, if that condition is true, meaning if a chicken's weight is less than or equal to 200 grams, let's assign that chicken to the category low. Otherwise, and this is the else{} part of the ifelse() function, assign that chicken to the category high. Finally, we have to let R know that weight_recode is a factor variable, so we call factor() on this new column. This way we take a numeric variable (weight), and turn it into a factor variable (weight_recode).

## convert numeric variable to factor chickwts %>% mutate(weight_recode = ifelse(weight <= 200, "low", "high"), weight_recode = factor(weight_recode)) %>% tabyl(weight_recode) ## weight_recode n percent ## high 54 0.7605634 ## low 17 0.2394366

converting a numeric type variable to a factor

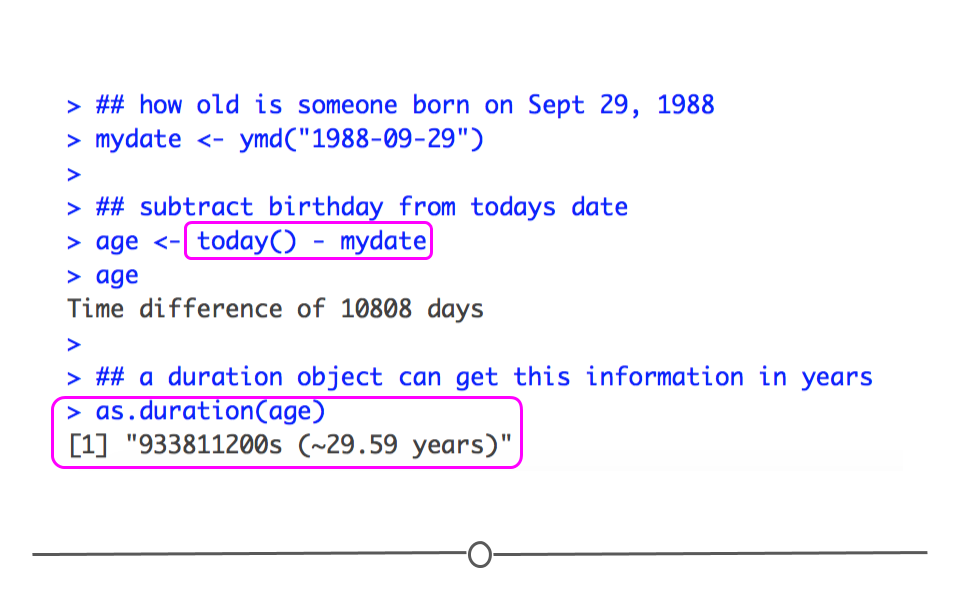

Working With Dates and Times

In earlier lessons, you were introduced to different types of objects in R, such as characters and numeric. Then we covered how to work with factors in detail. A remaining type of variable we haven't yet covered is how to work with dates and time in R.

As with strings and factors, there is a tidyverse package to help you work with dates more easily. The lubridate package is not part of the core tidyverse packages, so it will have to be loaded individually. This package will make working with dates and times easier. Before working through this lesson, you'll want to be sure that lubridate has been installed and loaded in:

#install.packages('lubridate') library(lubridate) Dates and Times Basics

When working with dates and times in R, you can consider either dates, times, or date-times. Date-times refer to dates plus times, specifying an exact moment in time. It's always best to work with the simplest possible object for your needs. So, if you don't need to refer to date-times specifically, it's best to work with dates.

Creating Dates and Date-Time Objects

To get objects into dates and date-times that can be more easily worked with in R, you'll want to get comfortable with a number of functions from the lubridate package. Below we'll discuss how to create date and date-time objects from (1) strings and (2) individual parts.

From strings

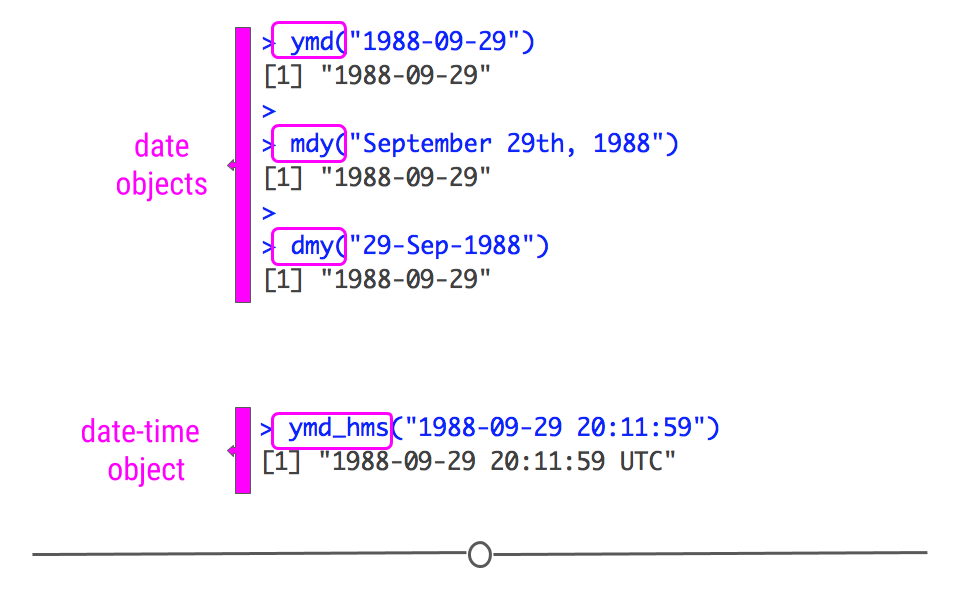

Date information is often provided as a string. The functions within the lubridate package can effectively handle this information. To use them to generate date objects, you can call a function using y, m, and d in the order in which the year (y), month (m), and date (d) appear in your data. The code below produces identical output for the date September 29th, 1988, despite the three distinct input formats. This uniform output makes working with dates much easier in R.

# year-month-date ymd("1988-09-29") ## [1] "1988-09-29" #month-day-year mdy("September 29th, 1988") ## [1] "1988-09-29" #day-month-year dmy("29-Sep-1988") ## [1] "1988-09-29"

creating date and date-time objects

However, this has only covered working with date objects. To work with date-time objects, you have to further include hour (h), minute(m), and second (s) into the function. For example, in the code below, you can see that the output contains time information in addition to the date information generated in the functions above:

ymd_hms("1988-09-29 20:11:59") ## [1] "1988-09-29 20:11:59 UTC" From individual parts

If you have a dataset where month, date, year, and/or time information are included in separate columns, the functions within lubridate can take this separate information and create a date or date-time object. To work through examples using the functions make_date() and make_timedate(), we'll use a dataset called nycflights13. As this dataset is not included with the R by default, you'll have to install and load it in directly:

#install.packages('nycflights13') library(nycflights13) Loading this package makes a data frame called flights, which includes "on-time data for all flights that departed NYC in 2013," available. We will work with this dataset to demonstrate how to create a date and date-time object from a dataset where the information is spread across multiple columns.

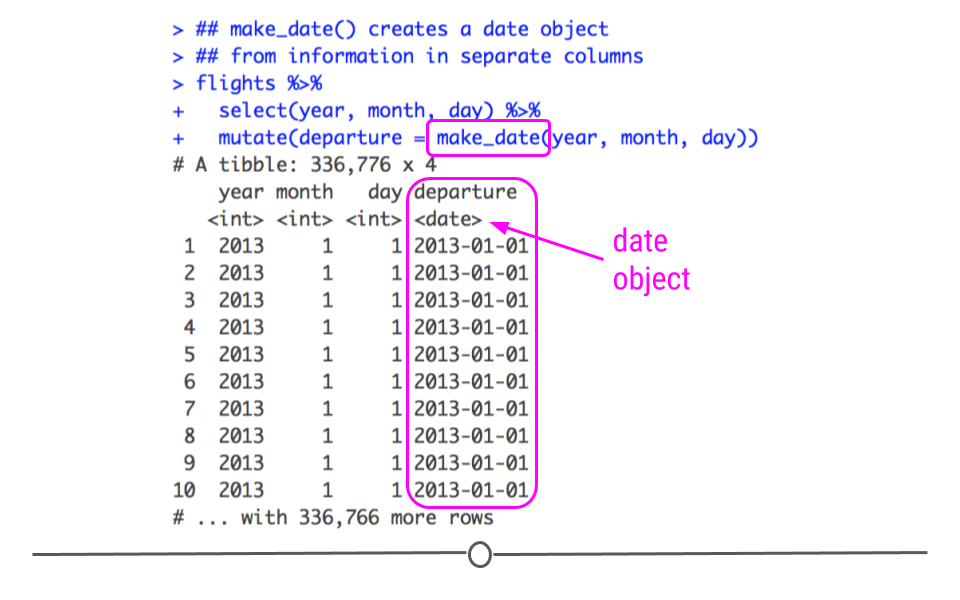

First, to create a new column, as we've done throughout the lessons in this course, we will use mutate(). To create a date object, we'll use the function make_date(). We just then need to supply the names of the columns containing the year, month, and day information to this function.

## make_date() creates a date object ## from information in separate columns flights %>% select(year, month, day) %>% mutate(departure = make_date(year, month, day)) ## # A tibble: 336,776 × 4 ## year month day departure ## <int> <int> <int> <date> ## 1 2013 1 1 2013-01-01 ## 2 2013 1 1 2013-01-01 ## 3 2013 1 1 2013-01-01 ## 4 2013 1 1 2013-01-01 ## 5 2013 1 1 2013-01-01 ## 6 2013 1 1 2013-01-01 ## 7 2013 1 1 2013-01-01 ## 8 2013 1 1 2013-01-01 ## 9 2013 1 1 2013-01-01 ## 10 2013 1 1 2013-01-01 ## # … with 336,766 more rows

mutate() and make_date() create a new column – departure – with a date object

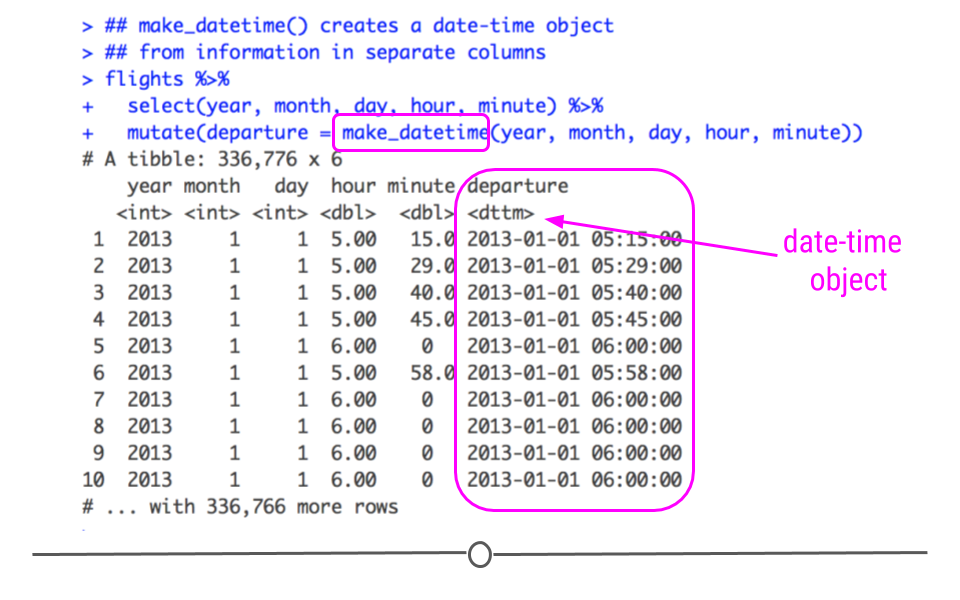

A similar procedure is used to create a date-time object; however, this requires the function make_datetime() and requires columns with information about time be specified. Below, hour and minute are included to the function's input.

## make_datetime() creates a date-time object ## from information in separate columns flights %>% select(year, month, day, hour, minute) %>% mutate(departure = make_datetime(year, month, day, hour, minute)) ## # A tibble: 336,776 × 6 ## year month day hour minute departure ## <int> <int> <int> <dbl> <dbl> <dttm> ## 1 2013 1 1 5 15 2013-01-01 05:15:00 ## 2 2013 1 1 5 29 2013-01-01 05:29:00 ## 3 2013 1 1 5 40 2013-01-01 05:40:00 ## 4 2013 1 1 5 45 2013-01-01 05:45:00 ## 5 2013 1 1 6 0 2013-01-01 06:00:00 ## 6 2013 1 1 5 58 2013-01-01 05:58:00 ## 7 2013 1 1 6 0 2013-01-01 06:00:00 ## 8 2013 1 1 6 0 2013-01-01 06:00:00 ## 9 2013 1 1 6 0 2013-01-01 06:00:00 ## 10 2013 1 1 6 0 2013-01-01 06:00:00 ## # … with 336,766 more rows

mutate() and make_datetime() create a new column – departure – with a date-time object

Working with Dates

The reason we've dedicated an entire lesson to working with dates and have shown you how to create date and date-time objects in this lesson is because you often want to plot data over time or calculate how long something has taken. Being able to accomplish these tasks is an important job for a data scientist. So, now that you know how to create date and date-time objects, we'll work through a few examples of how to work with these objects.

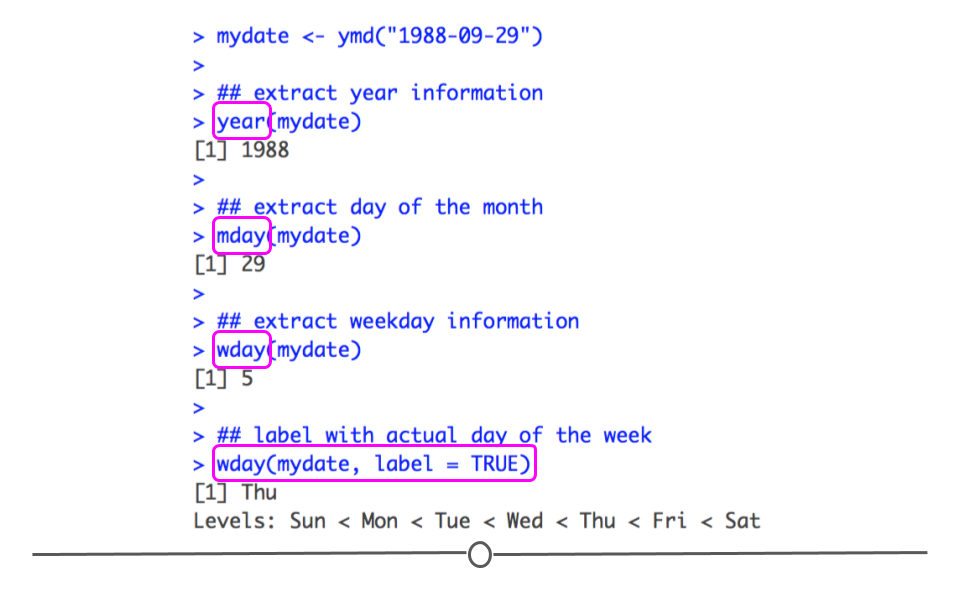

Getting components of dates

Often you're most interested in grouping your data by year, or just looking at monthly or weekly trends. To accomplish this, you have to be able to extract just a component of your date object. You can do this with the functions: year(), month(), mday(),wday(), hour(), minute() and second(). Each will extract the specified piece of information from the date or date-time object.